Connections

Connections define the databases that are available for DataMasque to run masking jobs against.

- Supported data sources

- Supported data types

- Add a new connection

- View and edit connection

- Delete connection

- Encrypted connections

- Required privileges

Supported data sources

DataMasque supports Oracle, Microsoft SQL Server, PostgreSQL, Amazon Redshift and MySQL databases.

| Databases | Versions |

|---|---|

| Oracle | 11gR2, 12gR1, 12gR2, 18c, 19c |

| Microsoft SQL Server | 2012, 2014, 2016, 2017, 2019 |

| Microsoft SQL Server (Linked Server)* | 2019 |

| PostgreSQL | 9.6, 10, 11, 12, 13 |

| Amazon Redshift | - |

| Amazon Aurora | - |

| Amazon RDS | - |

| MySQL | 5.7, 8.0 |

*Currently only the

mask_tabletask is supported on the data sources for MSSQL Linked Servers (DB2 and Sybase Adaptive Server Enterprise).joins are not supported for MSSQL Linked Servers. Note Linked Servers are not expected to be as performant as native database sources, tasks may take longer to complete.

Supported Data Types

Oracle data types

The following Oracle data types are supported.

| Data type | Description |

|---|---|

| char(n) | Fixed-length character data of 'n' size. |

| varchar2(n) | Variable-length character data of n size. |

| nvarchar2(n) | Variable-length character data of length n characters or bytes using national character set. |

| nchar(n) | Fixed-length character data of length n using national character set. |

| long | Variable-length character data. |

| float, number | Floating point number. |

| int | Whole 32 bit number. |

| date | Date not including time. |

| timestamp | Timestamp including both a date and a time. |

| timestamp with time zone | Timestamp including time zone. |

| timestamp with local time zone | Timestamp including local time zone. |

| xml type | XML data. |

Microsoft SQL Server data types

The following Microsoft SQL Server data types are supported.

| Data type | Description |

|---|---|

| char(n) | Fixed-length character data of n size. |

| varchar(n) | Variable-length character data of n size. |

| text | 32 bit string value. |

| nvarchar(n) | Variable-length character data of length n characters or bytes using national character set. |

| nchar(n) | Fixed-length character data of length n using national character set. |

| nvarchar2(n) | Variable-length character data of length n characters or bytes using national character set. |

| decimal(p,s) | Numerical value with p precision and s decimal places. |

| bigint | 64 bit integer value. |

| int | 32 bit integer value. |

| smallint | 16 bit integer value. |

| tinyint | 8 bit integer value. |

| float, real | Floating point number. |

| money | Currency value. |

| date | Date value with no time. |

| datetime | Date including a time. |

| datetimeoffset | Date and time, including time zone. |

| datetime2 | Date that is combined with a time of day based on 24-hour clock. |

| xml | XML data. |

Note: When attempting to mask

textdata type in Microsoft SQL Server, 2GB will be allocated for each text element when determining the maximum size of the element. To ensure that masking can run correctly, please ensure enough memory is available to the DataMasque instance.

PostgreSQL data types

The following PostgreSQL data types are supported.

| Data type | Description |

|---|---|

| char(n) | Fixed-length character data of n size. |

| varchar(n) | Variable-length character data of n size. |

| text | 32 bit string value. |

| decimal, numeric | Numerical value with decimal precision. |

| bigint, int8 | 64 bit integer value. |

| int, int4, integer | 32 bit integer value. |

| int2, smallint | 16 bit integer value. |

| float4, real | 32 bit numerical value with 6 decimal digits of precision. |

| double | 64 bit numerical value with 15 decimal digits of precision. |

| money | Currency value. |

| boolean | True or False. |

| date | Date value with no time. |

| timestamp | Timestamp including both a date and time. |

| timestamp with time zone | Timestamp including time zone. |

| json | For storing JSON (JavaScript Object Notation) data. Stores an exact copy of the input text. |

| jsonb | For storing JSON (JavaScript Object Notation) data. Stored in a decomposed binary format. |

| xml | XML data. |

Amazon Redshift data types

The following Amazon Redshift data types are supported.

| Data type | Description |

|---|---|

| char(n) | Fixed-length character data of n size. |

| nvarchar(n) | Variable-length character data of n size. |

| numeric, decimal | Numerical value with decimal precision. |

| int8, bigint | 64 bit integer value. |

| int, int4, integer | 32 bit integer value. |

| int2, smallint | 16 bit integer value. |

| float4, real | 32 bit numerical value with 6 decimal digits of precision. |

| float8, double precision | 64 bit numerical value with 15 decimal digits of precision. |

| bool, boolean | True or False. |

| date | Date value with no time. |

| timestamp | Timestamp including both a date and time. |

| timestamp with time zone | Timestamp including time zone. |

MySQL data types

The following MySQL data types are supported.

| Data type | Description |

|---|---|

| char(n) | Fixed-length character data of n size. |

| varchar(n) | Variable-length character data of n size. |

| text | 32 bit string value. |

| decimal, numeric | Numerical value with decimal precision. |

| tinyint | 8 bit integer value. |

| smallint | 16 bit integer value. |

| mediumint | 24 bit integer value. |

| int | 32 bit integer value. |

| bigint | 64 bit integer value. |

| float | 32 bit floating point value. |

| double | 64 bit floating point value. |

| date | Date value with no time. |

| datetime, timestamp | Date value with a time. |

| time | Time value. |

| json | For storing JSON (JavaScript Object Notation) data. |

MySQL

autoincrementprimary keys are not currently supported for themask_unique_keytask type.

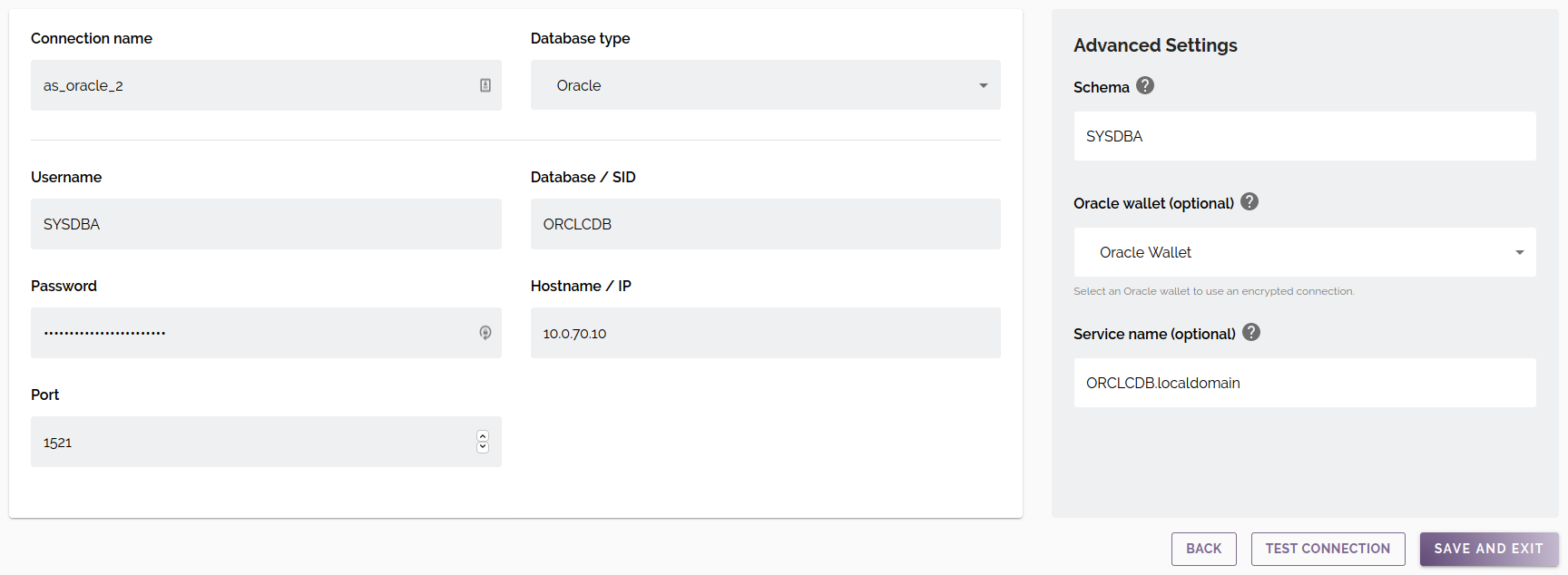

Add a new connection

To add a connection, click the ![]() button from Connections panel of the Database Masking Dashboard.

button from Connections panel of the Database Masking Dashboard.

Use the form to configure the connection parameters for your database. You may validate that the connection parameters are correct using the Test Connection button. This will attempt a database connection using the provided parameters. If the connection fails, an error will be shown with the failure details. The Test button is also available from the dashboard.

Connection Parameters

Standard parameters

| Connection name | A unique name for the connection. May only contain alphanumeric characters and underscores. |

| Database type | The type/engine of the database. Available options: Oracle, Microsoft SQL Server, Microsoft SQL Server (Linked Server), PostgreSQL, Amazon Redshift, MySQL. |

| Username | The username DataMasque will use to authenticate with the database server. |

| Password | The password DataMasque will use to authenticate with the database server. |

| Database / SID | The database name (or SID for Oracle databases). |

| Hostname / IP | The hostname or IP address of the database server. |

| Port | The port of the database server. |

Note: For AWS Aurora databases, use cluster endpoint for the

Database / SIDField.

Database Specific parameters

For Microsoft SQL Server (Linked Server) databases, the following field is required:

| Linked Server | The Identifier for the linked server to retrieve tables. The required format is: <LinkedServerName>.<DatabaseName>.<SchemaName> (where the SchemaName is synonymous with the username for DB2). E.g. DB2_LINK.TESTDB.DB2INST1 or SYBASE_LINK.master.dbo |

For Amazon Redshift databases, the following additional fields are required:

| S3 bucket name | Name of the S3 bucket to UNLOAD to and COPY from |

| Amazon Redshift IAM Role | ARN of an IAM Role that allows Amazon Redshift to access the S3 bucket |

Note: see the section Amazon Redshift for details on these fields.

Advanced parameters

For Oracle databases, the following fields are optional:

| Schema | The name of the schema that DataMasque will operate on. |

| Oracle wallet | Choose an Oracle wallet to enable encrypted connections. Oracle wallets can be uploaded using the Files interface. |

| Service name | The Oracle service name (overrides SID). Required for encrypted connections. |

For Microsoft SQL Server databases, the following field is optional:

| Instance | The instance name of the named database instance that DataMasque will connect to. |

Note: If a port number is specified in the connection, the value of the instance will be ignored when connecting. In order to connect to a named instance, the port number must be left blank. By default, named instances are configured on a dynamic port: it is best practise to use a non-default port where possible to minimise possible attack vectors.

For Postgres databases, the following field is optional:

| Schema | The name of the schema that DataMasque will operate on. |

For MySQL databases, the following field is optional:

| MySQL SSL Certificate | Choose a MySQL SSL Certificate to enable encrypted connections. These can be uploaded using the Files interface. |

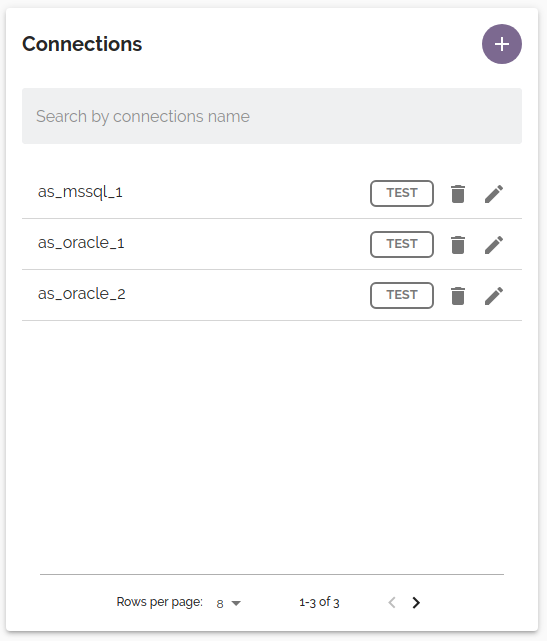

View and edit connection

To edit a connection, click the edit (![]() ) button for the connection you wish to edit in the Connections panel of the Database masking dashboard.

) button for the connection you wish to edit in the Connections panel of the Database masking dashboard.

A full list of connection parameters can be found under Connection Parameters.

Delete connection

To delete a connection, open the connection for editing (see View and edit connection) and click the Delete button. You will be prompted for confirmation before the connection is deleted.

A connection can also be deleted from the dashboard by clicking the trashcan icon.![]()

![]()

Encrypted connections

Oracle

For Oracle databases, encrypted connections require the use of an Oracle Wallet. Oracle Wallets for your databases can be uploaded and managed through the Files interface.

Once uploaded, the Oracle Wallet to use for a Connection can be chosen under advanced parameters.

Supported Oracle Wallet certificate signature algorithms

DataMasque recommends signing the Oracle Wallet certificate using an RSA CA key with one of the following signing

algorithms: sha256, sha384, or sha512. The sha1 algorithm is supported, but not recommended as it is insecure.

The md5 algorithm is unsupported.

Oracle Wallets signed with ECC keys are currently unsupported, however support will be added in future releases of DataMasque.

Note: the default

sign_algismd5in oracle 11g orapki/wallet manager, so it is recommended to explicitly specifysign_algwithsha256,sha384orsha512.

Microsoft SQL Server

DataMasque supports encrypted connections to Microsoft SQL Server instances that have the 'Force Protocol Encryption' option enabled. No additional configuration is required in DataMasque - encryption will be initiated by the server.

PostgreSQL

DataMasque supports encrypted connections to PostgreSQL instances that have the 'SSL mode' option enabled. No additional configuration is required in DataMasque - DataMasque will use encrypted connections with SSL mode preferred.

Note: DataMasque currently doesn't support

sslmode=verify-caorsslmode=verify-fullfor Postgres. This is on our roadmap and will be available in a future release.

Amazon Redshift

DataMasque supports encrypted connections to Amazon Redshift databases. Use the sslmode connection option to

control the connection encryption. No additional configuration is required in DataMasque.

Note: DataMasque doesn't support

sslmode=verify-caorsslmode=verify-fullfor Amazon Redshift currently. This is on our roadmap and will be included in future releases.

MySQL

DataMasque supports encrypted connections to MySQL databases. Encrypted connections require the use of MySQL SSL Certificates. These certificates can be uploaded through the Files interface.

Certificates must be uploaded as a .zip file containing the following file(s).

| File Name | Description |

|---|---|

| ca.pem | The Certificate Authority (CA) file. |

| client-cert.pem (optional) | The client public key certificate file. |

| client-key.pem (optional) | The client private key file. |

Depending on the server configuration, client-cert.pem and client-key.pem may not be required. For example, AWS RDS

requires only the Certificate Authority.

For more information on configuring MySQL to use encrypted connections, see here: https://dev.mysql.com/doc/refman/8.0/en/using-encrypted-connections.html.

Required privileges

DataMasque connections require the following database privileges to perform masking tasks.

Note: Run SQL tasks may require additional privileges, make sure you have granted the right privileges for the connection user to run the scripts.

Oracle connections

A DataMasque connection for Oracle requires:

CREATE SESSION,ALTER SESSION,UNLIMITED TABLESPACEandCREATE TABLEsystem privileges.SELECTonDBA_TABLES,DBA_INDEXES,DBA_CONSTRAINTS,DBA_DATA_FILES,DBA_TAB_COLUMNS,V_$DATABASEandV_$SESSION.

GRANT CREATE SESSION, ALTER SESSION, UNLIMITED TABLESPACE, CREATE TABLE TO <connection user>;

GRANT SELECT ON DBA_TABLES TO <connection user>;

GRANT SELECT ON DBA_INDEXES TO <connection user>;

GRANT SELECT ON DBA_CONSTRAINTS TO <connection user>;

GRANT SELECT ON DBA_DATA_FILES TO <connection user>;

GRANT SELECT ON DBA_TAB_COLUMNS TO <connection user>;

GRANT SELECT ON DBA_CONS_COLUMNS TO <connection user>;

GRANT SELECT ON V$DATABASE TO <connection user>;

GRANT SELECT ON V$SESSION TO <connection user>;

If the connection user is not the schema owner:

SELECT,ALTERandUPDATEprivileges to the target table(s).

GRANT SELECT, ALTER, UPDATE ON <target tables> TO <connection user>;

Note:

For

build_temp_tabletasks, you will require additional privileges on the target schema in order to create temporary tables:SELECT ANY TABLE,UPDATE ANY TABLE,CREATE ANY TABLE,ALTER ANY TABLEandDROP ANY TABLEsystem privileges.For

truncate_tabletasks, when the connection user is not the owner of the target tables, you will requireDROP ANY TABLEsystem privilege.For

mask_unique_keytasks, when the connection user is not the owner of the target tables, you will requireCREATE ANY TABLEandDROP ANY TABLEsystem privileges.

Microsoft SQL Server connections

A DataMasque connection for Microsoft SQL Server requires:

CONNECTandCREATE TABLEpermissions.SELECT,UPDATE,INSERT,ALTERandREFERENCESpermissions to the target schema.Ensure the connection user has the default schema set to the target schema for masking.

USE <target database>;

GRANT CONNECT, CREATE TABLE TO <connection user>;

GRANT SELECT, UPDATE, INSERT, ALTER, REFERENCES ON SCHEMA::<target schema> TO <connection user>;

ALTER USER <connection user> WITH DEFAULT_SCHEMA = <target schema>;

PostgreSQL connections

A DataMasque connection for PostgreSQL requires:

CONNECTandTEMPORARYprivileges to the target database.Ensure the connection user is a direct or indirect member of the role that owns the target tables(s).

SELECTprivilege onALL TABLES IN SCHEMA PG_CATALOGandINFORMATION_SCHEMA.COLUMNS.USAGEandCREATEprivileges to the target schema.Ensure the connection user has visibility (in their schema search path) of all tables that will be masked.

GRANT CONNECT, TEMPORARY ON DATABASE <target database> to <connection user>;

GRANT <target table owner role> TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.TABLES TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.COLUMNS TO <connection user>;

GRANT SELECT ON ALL TABLES IN SCHEMA PG_CATALOG TO <connection user>;

GRANT USAGE, CREATE ON SCHEMA <target schema> TO <connection user>;

ALTER USER <connection user> IN DATABASE <target database> SET SEARCH_PATH TO <target schema>;

GRANT SELECT ON INFORMATION_SCHEMA.TABLE_CONSTRAINTS TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.KEY_COLUMN_USAGE TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.CONSTRAINT_COLUMN_USAGE TO <connection user>;

MySQL connections

A DataMasque connection for MySQL requires:

CREATE TEMPORARY TABLESprivileges to the database.CREATE,DROP,INDEX,SELECT,UPDATE,INSERT,ALTERandREFERENCESprivileges to the target tables.

GRANT CREATE TEMPORARY TABLES ON <target database>.* TO <connection user>@<DataMasque host>;

GRANT CREATE, SELECT, UPDATE, INSERT, ALTER, REFERENCES ON <target database>.<target tables> TO <connection user>@<DataMasque host>;

Amazon Redshift connections

Database privileges

A DataMasque connection for Redshift requires that the user is the owner of the target tables, and:

TEMPORARYprivileges to the target database.Ensure the connection user is a direct or indirect member of the user that owns the target tables(s).

SELECTprivilege onALL TABLES IN SCHEMA PG_CATALOGandINFORMATION_SCHEMA.COLUMNS.USAGEandCREATEprivileges to the target schema.Ensure the connection user has visibility (in their schema search path) of all tables that will be masked.

GRANT TEMPORARY ON DATABASE <target database> to <connection user>;

GRANT <target table owner user> TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.TABLES TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.COLUMNS TO <connection user>;

GRANT SELECT ON ALL TABLES IN SCHEMA PG_CATALOG TO <connection user>;

GRANT USAGE, CREATE ON SCHEMA <target schema> TO <connection user>;

ALTER USER <connection user> SET SEARCH_PATH TO <target schema>;

GRANT SELECT ON INFORMATION_SCHEMA.TABLE_CONSTRAINTS TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.KEY_COLUMN_USAGE TO <connection user>;

GRANT SELECT ON INFORMATION_SCHEMA.CONSTRAINT_COLUMN_USAGE TO <connection user>;

Additional configurations

For masking on Amazon Redshift, DataMasque requires:

- An S3 bucket that is not public, with Object Lock disabled.

- An IAM role with an access policy attached to allow the Amazon Redshift database to access the S3 bucket.

- A dedicated AWS user with programatic access configured and access policy attached that allows DataMasque to access the S3 bucket.

Configuring Amazon S3 bucket

Access control

DataMasque uses Amazon S3 buckets as a staging area for processing Amazon Redshift database data. Therefore, it is important to restrict access on the S3 bucket you configure to use on your Amazon Redshift connection.

- Blocks public access, follow this link to enable Block Public Access feature on your S3 bucket.

- Apply access control to your S3 bucket. Follow this link for the steps to secure your S3 bucket.

Disable object lock

Object Lock must be disabled on your S3 bucket to allow DataMasque to clean up staging data when masking is completed. If Object Lock is not disabled, staging data will be left in your S3 bucket and a warning message will be included in the run log.

Optional configurations

The following configurations are not required, but recommended:

- Configure S3 buckets with SSE-S3, server side encryption.

- Disable Bucket Versioning.

Configuring Amazon Redshift database

Configuring access to S3 Bucket

Your Amazon Redshift database must have read-write access to the S3 bucket configured on your connection.

Follow the steps below to configure the Amazon Redshift database that will be accessing your S3 bucket:

- Open the Amazon IAM console, create an IAM policy. The following example IAM policy grants the required privileges to the test bucket:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "statement1",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:AbortMultipartUpload",

"s3:DeleteObject",

"s3:ListMultipartUploadParts"

],

"Resource": "arn:aws:s3:::test/*"

},

{

"Sid": "statement2",

"Effect": "Allow",

"Action": [

"s3:GetBucketPublicAccessBlock",

"s3:GetBucketPolicyStatus",

"s3:GetBucketObjectLockConfiguration",

"s3:GetEncryptionConfiguration",

"s3:ListBucketMultipartUploads",

"s3:ListBucket",

"s3:GetBucketAcl"

],

"Resource": "arn:aws:s3:::test"

}

]

}

- Create an IAM role:

- Select

AWS serviceas the trusted identity,Redshiftas the service andRedshift - Cutomizableas the use case. - Attach the policy created from the previous step to the role.

- Open the Amazon Redshift console and associate the IAM role to your Amazon Redshift database.

Reserve storage space for data processing

DataMasque requires additional storage on the database for creating staging tables when masking data. It is crucial to have sufficient storage space on your Amazon Redshift database to accommodate the total size of the tables you will be masking in parallel.

Configuring DataMasque's access to S3 bucket

DataMasque must be able to access the S3 bucket provided. A Profile that grants access to the bucket must be attached to the EC2 instance running DataMasque.

Follow the steps below to configure your DataMasque to access your S3 bucket:

- Open the Amazon IAM console and create an IAM policy. The following example IAM policy allows access to the test bucket and its contents:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "statement1",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:AbortMultipartUpload",

"s3:DeleteObject",

"s3:ListMultipartUploadParts"

],

"Resource": "arn:aws:s3:::test/*"

},

{

"Sid": "statement2",

"Effect": "Allow",

"Action": [

"s3:GetBucketPublicAccessBlock",

"s3:GetBucketPolicyStatus",

"s3:GetBucketObjectLockConfiguration",

"s3:GetEncryptionConfiguration",

"s3:ListBucketMultipartUploads",

"s3:ListBucket",

"s3:GetBucketAcl"

],

"Resource": "arn:aws:s3:::test"

}

]

}

- Create an IAM role:

- Select

AWS serviceas the trusted identity,EC2as the service andEC2underSelect your use case. - Attach the policy created from the previous step to the role.

Attach the Amazon IAM role created to the target DataMasque instance. You can use the following AWS command to attach an IAM role to an existing EC2 instance.

aws ec2 associate-iam-instance-profile --instance-id <ec2 instance id> --iam-instance-profile Name=<name of IAM role>Click here for more information on this command.

Amazon RDS connections

Oracle (AWS RDS)

A DataMasque connection from an AWS RDS Oracle instance requires the following permissions executed as an admin user:

GRANT CREATE SESSION, ALTER SESSION, CREATE TABLE TO <connection user>;

ALTER USER <connection user> QUOTA UNLIMITED ON <target data tablespace>;

ALTER USER <connection user> QUOTA UNLIMITED ON <temp tablespace>;

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'DBA_TABLES',p_grantee => '<connection user>', p_privilege => 'SELECT');

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'DBA_INDEXES',p_grantee => '<connection user>', p_privilege => 'SELECT');

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'DBA_CONSTRAINTS',p_grantee => '<connection user>', p_privilege => 'SELECT');

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'DBA_DATA_FILES',p_grantee => '<connection user>', p_privilege => 'SELECT');

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'DBA_TAB_COLUMNS',p_grantee => '<connection user>', p_privilege => 'SELECT');

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'DBA_CONS_COLUMNS',p_grantee => '<connection user>', p_privilege => 'SELECT');

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'V_$DATABASE',p_grantee => '<connection user>', p_privilege => 'SELECT');

EXECUTE rdsadmin.rdsadmin_util.grant_sys_object( p_obj_name => 'V_$SESSION',p_grantee => '<connection user>', p_privilege => 'SELECT');

Check the AWS RDS Oracle privileges documentation for more information related to AWS RDS Oracle privileges.

Microsoft SQL Server (AWS RDS)

A DataMasque connection to an AWS RDS Microsoft SQL Server requires the same statements to set up the privileges as a normal Microsoft SQL Server connection. Please refer to MSSQL connections for more information. The AWS RDS for SQL Server Documentation has more information specifically on managing AWS RDS Microsoft SQL Server users and roles.

PostgreSQL (AWS RDS)

A DataMasque connection to an AWS RDS PostgreSQL instance requires the same statements to set up the privileges as a normal PostgreSQL connection. Please refer to PostgreSQL connections for more information. The AWS PostgreSQL Users and Roles article has more information specifically on managing AWS RDS PostgreSQL users and roles.

MySQL (AWS RDS)

A DataMasque connection to an AWS RDS MySQL instance requires the same statements to set up the privileges as a normal MySQL connection. Please refer to MySQL connections for more information. The AWS RDS for MySQL Documentation has more information specifically on managing AWS RDS MySQL users and roles.