Ruleset YAML specification


The ruleset YAML configuration provides instructions that DataMasque will follow when performing a masking run against a target database. Rulesets consist of one or more tasks, which can contain many different types of instructions. The most common use case is applying masking rules to sensitive data in database tables.

Ruleset Properties

The following properties are specified at the top-level of a ruleset YAML file:

- version (required): The schema version used by this ruleset. The default value present in the editor when creating a new ruleset is generally the value that you should be using. See Schema versioning for more information.
- name (deprecated; will be removed in release 3.0.0): A unique name that will be used to refer to your ruleset. This field may only contain alphanumeric characters and underscores, and its length is limited to 64 characters.
- tasks (required): A list of tasks to be performed in order on the target database. See Database task types for the comprehensive list of the available task types and their associated parameters.
- task_definitions (optional): A list of task definitions to be referenced from the ruleset's list of tasks through YAML anchors and aliases.
- rule_definitions (optional): A list of rule definitions to be referenced from a list of rules through YAML anchors and aliases.
- mask_definitions (optional): A list of mask definitions to be referenced from a list of masks through YAML anchors and aliases.
- skip_defaults (optional): See Default values to skip.
- random_seed (optional): Deprecated in favour of the Run secret option. See Freezing random values.

Example

The following ruleset provides an example for replacing the last_name column of every row in the users table with the fixed value "redacted last name":

```yaml
# My ruleset.yml
version: '1.0'
tasks:
  - type: mask_table
    table: users
    key: user_id
    rules:
      - column: last_name
        masks:
          - type: from_fixed
            value: 'redacted last name'
```

Note: The tasks must be indented using two spaces. For example:

```yaml
tasks:
  - type: mask_table
```

Here, - type: mask_table is indented two spaces from its parent, tasks:.

Important note on case-sensitivity: For all tasks except run_sql, database identifiers, such as table and column names, should be referenced as you would otherwise reference them in an SQL query. When masking a case-sensitive database, identifiers must be referenced in the ruleset using the correct case.

To refer to a case-sensitive table or column in a database, the identifier must be enclosed in double quotation marks. However, in YAML, quotation marks are used to denote a string value, so any enclosing quotation marks are not considered part of the value. It is therefore necessary to enclose the entire name, including the double quotation marks, in an outer set of single quotation marks. For example:

```yaml
# Case-sensitive table name; enclosed in both single and double quotations.
table: '"CaseSensitiveTableName"'
```

To refer to a case-sensitive table in a schema, the schema name must also be enclosed in double quotation marks if it is case-sensitive. The entire combination of schema and table name must be enclosed in single quotation marks. For example:

```yaml
# Case-sensitive schema and table name; enclosed in both single and double quotations.
table: '"CaseSensitiveSchemaName"."CaseSensitiveTableName"'
```

If referencing a combination of table and column, you will need quotation marks around both the table and column names within the surrounding single quotation marks. For example:

```yaml
# Case-sensitive table and column name; enclosed in both single and double quotations.
column: '"CaseSensitiveTable"."CaseSensitiveColumn"'
```

Identifier names containing double quotation marks, backslashes, periods, and whitespace should always be enclosed in double quotation marks. Also, literal double quotation marks and backslashes must be preceded by a backslash:

```yaml
# Case-sensitive table and column name containing special characters.
column: '"Case\"Sensitive\\Table"."Case.Sensitive Table"'
```

Note: Backslashes and single quotation marks are not supported in identifier names for Microsoft SQL Server (Linked Server) databases.
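If you generate rulesets programmatically, the quoting rules above can be captured in a small helper. This is a minimal sketch, assuming you build the YAML text yourself; quote_identifier and yaml_identifier are hypothetical names, not part of DataMasque. Each part is backslash-escaped and wrapped in double quotation marks, parts are joined with periods, and the result is wrapped in YAML single quotation marks.

```python
def quote_identifier(part: str) -> str:
    """Quote one identifier part: escape backslashes and double quotes, then wrap in double quotes."""
    # Escape backslashes first so the backslashes added before quotes are not doubled again.
    escaped = part.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def yaml_identifier(*parts: str) -> str:
    """Build a YAML single-quoted value such as '"Schema"."Table"' from identifier parts."""
    joined = ".".join(quote_identifier(p) for p in parts)
    # In a YAML single-quoted scalar, a literal ' is written as ''.
    return "'" + joined.replace("'", "''") + "'"


if __name__ == "__main__":
    print("table:", yaml_identifier("CaseSensitiveSchemaName", "CaseSensitiveTableName"))
    print("column:", yaml_identifier('Case"Sensitive\\Table', "Case.Sensitive Table"))
```

The second call reproduces the special-character example above: column: '"Case\"Sensitive\\Table"."Case.Sensitive Table"'.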

Schema versioning

Schema changes to the DataMasque ruleset specification are tracked using the version field of the ruleset. The version number consists of two fields in the format major.minor. Minor version increments reflect backwards-compatible changes to the schema, whereas major version increments represent breaking changes which will require some form of migration from previous versions. Wherever possible, DataMasque will handle such migrations for you automatically when you upgrade.

Each release of DataMasque only supports the most recent major ruleset version at the time of release. As such, the major schema version of your rulesets must equal the major version supported by your DataMasque release. The minor schema version of your rulesets must be equal to or less than the minor version supported by your DataMasque release.

The ruleset schema version supported by this release of DataMasque is "1.0".
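The compatibility rule above can be checked mechanically. The sketch below is a hypothetical helper (is_supported_version is not a DataMasque function), assuming the supported version is "1.0" as stated for this release: the major numbers must match exactly, and the ruleset's minor number must not exceed the supported minor number.

```python
from typing import Tuple

SUPPORTED_VERSION = "1.0"  # The ruleset schema version supported by this release.


def parse_version(version: str) -> Tuple[int, int]:
    """Split a 'major.minor' string into two integers."""
    major, minor = version.split(".")
    return int(major), int(minor)


def is_supported_version(ruleset_version: str, supported: str = SUPPORTED_VERSION) -> bool:
    """Return True if a ruleset's schema version can run on the given release."""
    r_major, r_minor = parse_version(ruleset_version)
    s_major, s_minor = parse_version(supported)
    # Major versions must be equal; the ruleset's minor version may be equal or lower.
    return r_major == s_major and r_minor <= s_minor


if __name__ == "__main__":
    for v in ("1.0", "1.1", "2.0"):
        print(v, is_supported_version(v))
```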

Writing comments

While creating a ruleset, you can write comments in it. Commented text is skipped during execution and can be used to describe a particular block of the ruleset.

If you begin a line with # (hash symbol), all text on that line will become a comment.

```yaml
version: '1.0'
# This line will become a comment.
tasks:
  - type: mask_table
```

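If you place # on a line after other text (separated from it by a space), all text after that # on that line will become a comment. Any text before it will still be part of the ruleset. A # inside a quoted string, such as a format string, is part of the value and does not start a comment.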

```yaml
version: '1.0'
tasks:
  - type: mask_table # The name of this task type will not be affected by this comment.
```

In the DataMasque ruleset editor, the shortcut key combination for commenting ruleset blocks is CTRL + / on Linux and Windows, and ⌘ + / on macOS. If your cursor is on a line when this shortcut is used, the entire line will be commented out. Highlighting multiple lines at once will cause all highlighted lines to be commented out.

Database task types

A ruleset consists of a list of tasks to be performed in sequence on the target database. Tasks are performed serially from top to bottom, but special serial and parallel tasks can be used to nest other tasks within them for performance (parallelism) or dependency management.

Sensitive data discovery

The run_data_discovery task type inspects the metadata of your database, searching for columns which are likely to contain sensitive data. On completion, a report is generated containing a summary of all identified columns and their current masking coverage. It is recommended to include a single run_data_discovery task in your rulesets to help ensure complete masking coverage and provide ongoing protection as new sensitive data is added to your database.

See the Sensitive Data Discovery guide for more information on this feature.

Parameters

This task type does not have any parameters.

Example

The following shows an example ruleset that will execute only the run_data_discovery task and no masking tasks. This may be useful when starting a new ruleset from scratch, to determine a starting point for developing your masking rules.

The run_data_discovery task may also be included in a ruleset alongside other masking tasks to provide continuous feedback on the masking coverage provided by the ruleset.

```yaml
version: '1.0'
tasks:
  - type: run_data_discovery
```

Schema Discovery

The run_schema_discovery task type inspects the metadata of your database, searching for schemas, tables, and columns, and can flag certain columns which are likely to contain sensitive data. On completion, a report is generated containing a summary of all identified schemas, tables, columns, and relevant metadata of the data within the columns.

See the Schema Discovery guide for more information on this feature.

Parameters

This task type does not have any parameters.

Example

The following shows an example ruleset that will execute only the run_schema_discovery task and no masking tasks. This may be useful when starting a new ruleset from scratch, to determine a starting point for developing your masking rules.

```yaml
version: '1.0'
tasks:
  - type: run_schema_discovery
```

Table masks

Each mask_table task defines masking operations to be performed on a database table. More detail is provided about these tasks under the Masking Tables section.

Parameters

Each task with type mask_table is defined by the following parameters:

- table (required): The name of the table in the database. The table name can be prefixed with a schema name to reference a table in another schema. If the table or schema name is case-sensitive, you must enclose the name in double and single quotation marks in order to specify the casing of the name. For example, table: '"CaseSensitiveSchema"."CaseSensitiveTable"'
- key (required): One or more columns that identify each table row. Composite keys may be specified for the key parameter; for more details, see Composite keys.
  - For Oracle databases it should always be ROWID (key: ROWID). DataMasque will implicitly use ROWID when ROWID is not specified. For more details, refer to Query optimisation.
  - For Microsoft SQL Server and PostgreSQL databases, it is recommended to use the primary key, or any other unique key that is not modified during masking, for better performance. If a non-unique key is used, all rows with the same key value will receive the same masked values. The key columns must not contain any NULL values. If the key is case-sensitive, you may enclose each key value in double and single quotation marks in order to specify the casing of the key. For example, key: '"Customer_ID"'
  - If the columns specified for the key parameter cannot be used to uniquely identify rows, then the masked values will be the same for rows that have the same key value. Refer to the key and hash columns example in the Notes section for how to avoid producing duplicate masked values.
- rules (required): A list of masking rules (or a dictionary mapping arbitrary keys to rules) to apply to the table. Rules are applied sequentially in the order they are listed. Regular (non-conditional) masking rules are defined by the following attributes:
  - column (required): The name of the column to mask.
  - masks (required): A list of masks (or a dictionary mapping arbitrary keys to masks) to apply to the column. Masks are applied sequentially in the order they are listed, so the output value from each mask is passed to the next mask in the list. Each type of mask has a different set of required attributes, as described for each type in Mask types.
  - hash_columns (optional): A list of columns which will be used as input to the Deterministic masking algorithm for this rule. If hash_columns is provided, all mask types that rely on randomisation become deterministic based on the hash_columns column values. Values in the provided columns can be null. The hash column can be prefixed with a table name to reference a column in another table, and that table name can be prefixed with a schema name to reference a table in another schema. Hash columns can also be specified by a list of dictionaries with the following keys:
    - column_name (required): The name of the column which will be used as input, as above.
    - case_transform (optional): upper/lower. Applies a case transform to the input values, giving consistent hashed values irrespective of case. This is useful if values are stored with different cases in different tables; for example, email addresses could be stored as all lowercase in one table but mixed case in another.
    - json_path (optional): If the column contains JSON data, the path to the value in the JSON data you wish to hash can be specified here; otherwise the hash will be performed on the entire column.
    - xpath (optional): If the column contains XML data, the XPath to the value in the XML data you wish to hash can be specified here; otherwise the hash will be performed on the entire column.

    For more information on json_path, please refer to the JSON documentation. For more information on xpath, please refer to the XML documentation. For more information on deterministic masking, please refer to Deterministic masking.
- workers (optional): The number of parallel processes to use for masking this table (defaults to 1). Each process will operate on a separate batch of rows from the table (batch size is a configurable run option). Increasing workers may decrease masking run times, depending on database performance and the complexity (e.g. number of columns to mask) of the masking task. It is recommended to increase the number of workers if DataMasque connections to your target database spend more time processing queries than waiting for DataMasque (i.e. the "waiting for clients" time approximates DataMasque CPU time), which suggests DataMasque could efficiently use multiple workers to mask other batches while waiting for database responses. Additionally, it is recommended that the number of parallel processes multiplied by the number of workers assigned to each process does not exceed twice the number of CPUs available on your deployed instance.
- index_key_columns (optional): When index_key_columns is true (the default setting), DataMasque will create an additional index on all key columns if no existing index contains all key columns. You may wish to disable the creation of a new index if you have an existing index on some of the key columns that will provide adequate performance.
  - For Oracle databases, this option has no effect because ROWID is always used as the key.
  - Redshift databases do not support indexes, so this option has no effect for them.
  - This option currently has no effect for Microsoft SQL Server (Linked Server) databases.
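As a rough planning aid for the workers guideline above, the sketch below computes the largest per-task workers value that keeps parallel processes multiplied by workers within twice the CPU count. It is a hypothetical helper, not part of DataMasque; the CPU count you pass should be that of your deployed DataMasque instance, since the fallback only inspects the machine running the script.

```python
import os
from typing import Optional


def max_workers_per_process(parallel_processes: int, cpus: Optional[int] = None) -> int:
    """Largest workers value with parallel_processes * workers <= 2 * cpus (never below 1)."""
    if parallel_processes < 1:
        raise ValueError("parallel_processes must be at least 1")
    if cpus is None:
        # Fallback: the local machine's CPU count, which may differ from your DataMasque instance.
        cpus = os.cpu_count() or 1
    return max(1, (2 * cpus) // parallel_processes)


if __name__ == "__main__":
    # e.g. 3 mask_table tasks running in parallel on an 8-CPU instance
    print(max_workers_per_process(3, cpus=8))
```

With 3 parallel processes on 8 CPUs this gives 5 workers each (3 × 5 = 15, within the budget of 16). Treat the result as a ceiling to experiment below, not a tuning target.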

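The following example masks date_of_birth deterministically based on first_name, with first_name also included as part of a composite key:

```yaml
version: '1.0'
tasks:
  - type: mask_table
    table: users
    key:
      - user_id
      - first_name
    rules:
      - column: date_of_birth
        hash_columns:
          - first_name
        masks:
          - type: from_random_datetime
            min: '1980-01-01'
            max: '2000-01-01'
```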
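To illustrate what hash_columns and case_transform achieve, the sketch below derives a date of birth in the same range as the example from a hash of the first_name value, so identical inputs always produce identical outputs, and lowercasing makes the result independent of case. This is not DataMasque's deterministic masking algorithm: the SHA-256 hashing, the secret string, the null handling, and the function name masked_date_of_birth are all assumptions for illustration.

```python
import hashlib
import random
from datetime import date, timedelta
from typing import Optional


def masked_date_of_birth(
    hash_value: Optional[str],
    case_transform: Optional[str] = None,
    secret: str = "example-run-secret",  # hypothetical stand-in for a run secret
    start: date = date(1980, 1, 1),
    end: date = date(2000, 1, 1),
) -> date:
    """Return a date in [start, end] that depends only on the hash input and the secret."""
    if hash_value is None:
        payload = "null"  # assumption: all null inputs hash to one fixed marker
    else:
        if case_transform == "lower":
            hash_value = hash_value.lower()
        elif case_transform == "upper":
            hash_value = hash_value.upper()
        payload = "value:" + hash_value
    digest = hashlib.sha256(f"{secret}\x00{payload}".encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest, "big"))  # seeded from the hash, so repeatable
    return start + timedelta(days=rng.randint(0, (end - start).days))


if __name__ == "__main__":
    # With case_transform="lower", different casings of the same name give the same date.
    print(masked_date_of_birth("Alice", case_transform="lower"))
    print(masked_date_of_birth("ALICE", case_transform="lower"))
```

Because the output depends only on the hash input and the secret, rows in different tables with the same first_name would receive the same masked date, which is the consistency deterministic masking is meant to provide.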

Composite keys

A composite key can be specified in the following formats:

- A list of columns; for example, key: ['invoice_id', 'product_id'] would be used to indicate a composite key consisting of a combination of two columns, invoice_id and product_id.
  - For Microsoft SQL Server and PostgreSQL, when specifying a composite key, the key columns must be listed in the same order as they are defined in the database.
- A multiline composite key, as shown below.

```yaml
key:
  - 'invoice_id'
  - 'product_id'
```

Example mask_table ruleset

```yaml
version: '1.0'
tasks:
  - type: mask_table
    table: users
    key: user_id
    rules:
      - column: last_name
        masks:
          - type: from_fixed
            value: "redacted last name"
```

Notes

- Index operations will be performed online (ONLINE=ON) on SQL Server editions that support this feature.
- The following types cannot be used as key columns:
  - Microsoft SQL Server: datetime, time(7), datetime2(7), datetimeoffset(7)
  - PostgreSQL: real, double precision
- While rules and masks should typically be provided as lists, they can also be specified as dictionaries that map arbitrary keys to rules/masks. For example:

```yaml
...
rules:
  last_name_rule:
    column: last_name
    masks:
      fixed_mask:
        type: from_fixed
        value: "redacted last name"
```

- Specifying rules or masks as a dictionary can allow you to override the rule/mask for a specific key when inheriting from a definition.
- When masking a table, if a non-unique key is specified for the mask_table task alongside hash_columns, and the hash_columns values differ between rows with the same key value, the final masked values will depend arbitrarily on the order in which update statements are executed. This can be avoided by including the targeted hash_columns as part of a composite key for the mask_table task.

Mask a primary key or unique key

The mask_unique_key task type can be used to mask the values in a primary key or unique key. Masking a primary key or unique key requires all masked values to be unique, which requires the use of this special-purpose task type.

The mask_unique_key task type replaces all non-null rows of the target key with new, unique values, generated in accordance with a user-specified format. The target primary or unique key columns and associated foreign key columns are updated with these unique replacement values in a single operation to maintain referential integrity.
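The idea of generating unique replacements once and applying the same old-to-new mapping to the key and to every column that references it can be sketched in memory. This is a simplified, hypothetical model (a single integer column, with replacement values counting up from a minimum, similar to a '{!int,100000:}' format), not DataMasque's implementation, which performs the update inside the database.

```python
import random
from typing import Dict, List, Optional


def build_replacement_map(keys: List[Optional[int]], minimum: int, rng: random.Random) -> Dict[int, int]:
    """Map every distinct non-null key to a unique replacement value >= minimum."""
    distinct = sorted({k for k in keys if k is not None})
    replacements = list(range(minimum, minimum + len(distinct)))
    rng.shuffle(replacements)  # random assignment of the unique values
    return dict(zip(distinct, replacements))


def apply_map(values: List[Optional[int]], mapping: Dict[int, int]) -> List[Optional[int]]:
    """Rewrite a key column or a referencing column with the same mapping; nulls stay null."""
    return [None if v is None else mapping[v] for v in values]


if __name__ == "__main__":
    users = [501, 502, 503]               # e.g. primary key values
    transactions = [502, 502, 501, None]  # e.g. foreign key values referencing users
    mapping = build_replacement_map(users, 100000, random.Random(7))
    print(apply_map(users, mapping))
    print(apply_map(transactions, mapping))
```

Because both columns are rewritten from one mapping, every referencing row still points at the same (now masked) parent row.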

Notes:

- Each mask_unique_key task will mask the members of a single primary key or unique key constraint. Multiple mask_unique_key tasks are required to mask multiple unique keys on a single table.
- The mask_unique_key task can be used on tables with up to 200,000,000 non-null rows.
- DataMasque will only cascade to foreign keys that directly reference the target key. DataMasque does not currently support automatic cascading to any foreign keys beyond direct foreign key references.
- When applied to a composite key, replacement values are only generated for rows that contain a complete, non-null key. For any null or partially null rows, all columns of the target key will be set to NULL.
- The mask_unique_key task must only be applied to columns which are in fact unique (i.e. the target key columns have a PRIMARY KEY or UNIQUE constraint enforced). Unique keys that have multiple NULL rows (e.g. using a filtered unique index in SQL Server) are allowed; such rows will not be modified by this task.
- When masking a clustered index on SQL Server, the performance of mask_unique_key can be significantly improved by disabling all other indexes and constraints on the target table for the duration of the task. It is recommended to implement this in your ruleset using run_sql tasks before and after the mask_unique_key task to disable and then re-enable these constraints.
- The mask_unique_key task cannot modify SQL Server columns created with the IDENTITY property, or Oracle / PostgreSQL columns created with GENERATED ALWAYS AS IDENTITY.
- Use of mask_unique_key for Amazon Redshift or Microsoft SQL Server (Linked Server) databases is not currently supported in DataMasque.
- Due to the random assignment of replacement values, it is possible (though generally rare) that a row may be assigned a masked value that is identical to its pre-masking value. In these cases, the masking is still effective, as an attacker will not be able to identify which rows' values were replaced with an identical value. However, if you need to guarantee that all masked values are different from their pre-masking values, you should use min and max parameters to ensure the range of possible output values from your format string does not overlap with the range of pre-masking values in your database.

Warning: The mask_unique_key task type must not be run in parallel with tasks that operate on any of the following:

- The target table of the mask_unique_key task.
- Tables containing foreign keys that reference the target_key columns.
- Any tables specified in additional_cascades.

Parameters

Each task with type mask_unique_key is defined by the following parameters:

- table (required): The name of the database table that contains the primary key or unique key to be masked.
- target_key (required): A list of items defining each column that makes up the primary or unique key, and the format in which replacement values will be generated for that column. Composite keys can be masked by including multiple columns and formats in this list. Each item has the following attributes:
  - column (required): The name of the column to be masked.
  - format (optional): The format which will be used to generate replacement values for the column. See Format string syntax for details. Defaults to '{!int}'.
- additional_cascades (optional): Use this parameter to propagate masked values to implied foreign key columns. Implied foreign keys are dependencies that exist between tables but are not enforced by foreign key constraints, and are hence not defined in the database. This parameter is a list of implied foreign keys to the target_key; masked values will be cascaded to these columns. See Cascading of masked values for more details on how this works. Each additional_cascades item has the following attributes:
  - table (required): The name of the table containing the cascade target columns, which have an implicit reference to the target_key of this task. The table name can be prefixed with a schema name to cascade to a table in another schema.
  - columns (required): A list of column dictionaries, each describing the relationship between a column of the target key and a column on the cascade target table. Each column mapping item has the following attributes:
    - source (required): The name of a column in the target key from which masked values will be cascaded to the corresponding target column.
    - target (required): The name of a column on the cascade target table to which masked values from the source column will be cascaded.
- batch_size (optional): To avoid excessive memory consumption when masking large tables, DataMasque generates replacement values in batches. This value controls the maximum number of unique values that are generated in a single batch. In general, the default of 50,000 will be acceptable for most use cases.

Note:

- When using

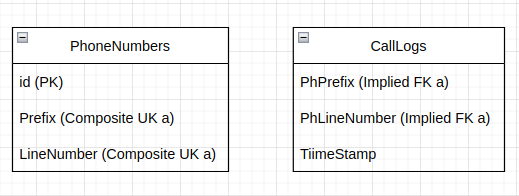

additional cascadesto propagate composite primary or unique key columns to implied foreign key columns, you need to specify all the columns in theadditional cascadesparameter that are corresponding to the referenced primary or unique key columns. Warning! Not specifying all the columns that are corresponding to the referenced primary or unique key columns will cause data propagation to fail from the referenced composite primary or unique key columns to the composite implied foreign keys. In other words, partial cascades that reference a subset of the target key columns will result in data not being propagated to the target table, resulting in inconsistent data between the two tables. For example: A composite unique key in aPhoneNumberstable which consists ofPrefixandLineNumbercolumns which are referenced byPhPrefixandPhLineNumbercolumns in theCalllogstable but without foreign key constraint. Therefore it is an implied foreign key that requires using theadditional_cascadesparameter to propagate the masked unique key values to ensure data integrity across the tables.

A ruleset needs to be written to specify all corresponding implicit foreign key columns in the additional_cascades parameter, so that the masked unique keys will be propagated collectively to the foreign keys:

```yaml
version: "1.0"
tasks:
  - type: mask_unique_key
    table: PhoneNumbers
    target_key:
      - column: Prefix # part of the composite unique key constraint
        format: "{!int, 1:150, pad}"
      - column: LineNumber # part of the composite unique key constraint
        format: "{!int, 50001:100000, pad}"
    additional_cascades:
      - table: CallLogs
        columns:
          # Need to include both Prefix/PhPrefix and LineNumber/PhLineNumber for data to propagate properly.
          - source: Prefix # UK a
            target: PhPrefix
          - source: LineNumber # UK a
            target: PhLineNumber
```

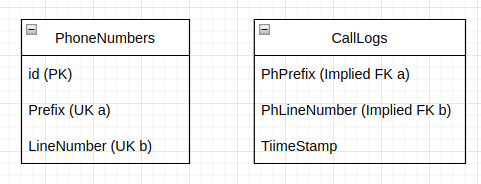

- However, if they are not composite foreign keys but individual foreign keys, a ruleset needs to be written to propagate the masked unique keys individually:

```yaml
version: "1.0"
tasks:
  - type: mask_unique_key
    table: PhoneNumbers
    target_key:
      - column: Prefix # has its own unique key constraint
        format: "{!int, 1:150, pad}"
      - column: LineNumber # has its own unique key constraint
        format: "{!int, 50001:100000, pad}"
    additional_cascades:
      # Need to include both Prefix/PhPrefix and LineNumber/PhLineNumber for data to propagate properly.
      - table: CallLogs
        columns:
          - source: Prefix # UK a
            target: PhPrefix
      - table: CallLogs
        columns:
          - source: LineNumber # UK b
            target: PhLineNumber
```
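One way to picture why a partial cascade leaves data unpropagated: if replacement values are tracked per complete (Prefix, LineNumber) pair, a referencing row can only be looked up when both of its columns are supplied. The sketch below models that assumption in memory; cascade_composite and the sample values are hypothetical and do not describe DataMasque's internals.

```python
from typing import Dict, List, Sequence, Tuple

CompositeKey = Tuple[str, ...]


def cascade_composite(
    rows: List[Dict[str, str]],
    mapping: Dict[CompositeKey, CompositeKey],
    target_columns: Sequence[str],
) -> List[Dict[str, str]]:
    """Rewrite target_columns in each row using a mapping keyed by the complete composite value."""
    if not mapping:
        return [dict(row) for row in rows]
    key_width = len(next(iter(mapping)))
    if len(target_columns) != key_width:
        # With only part of the composite key there is nothing to look up, so nothing is
        # propagated and the rows keep their pre-masking values (now inconsistent).
        return [dict(row) for row in rows]
    updated_rows = []
    for row in rows:
        old_key = tuple(row[column] for column in target_columns)
        updated = dict(row)
        updated.update(zip(target_columns, mapping[old_key]))
        updated_rows.append(updated)
    return updated_rows


if __name__ == "__main__":
    mapping = {("001", "050001"): ("117", "083412")}  # hypothetical masked pair
    call_logs = [{"PhPrefix": "001", "PhLineNumber": "050001"}]
    print(cascade_composite(call_logs, mapping, ["PhPrefix", "PhLineNumber"]))
    print(cascade_composite(call_logs, mapping, ["PhPrefix"]))  # partial: unchanged
```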

Example 1

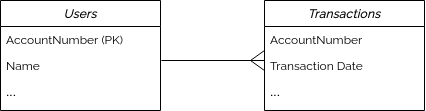

The following example will mask the primary key column AccountNumber of the Users table with unique replacement values. Another table, Transactions, also has a column named AccountNumber which has a foreign key relationship to the AccountNumber column of the Users table.

Account numbers will be generated with at least 6 digits; the minimum value is 100,000 and the maximum value grows as required, depending on the number of rows in the table.

In the ruleset below, you only need to specify masking rules for the primary key column to be masked, AccountNumber. You do not need to explicitly define in the ruleset the foreign key columns that the replacement values are propagated to. DataMasque will automatically detect primary key and foreign key relationships in the database and propagate the replacement values to any related foreign key columns; in this case, the new values for AccountNumber in the Users table are implicitly propagated to the AccountNumber column in the Transactions table.

```yaml
version: '1.0'
tasks:
  - type: mask_unique_key
    table: Users
    target_key:
      - column: '"AccountNumber"'
        format: '{!int,100000:}' # Account numbers will be generated with at least 6 digits
```


Example 2

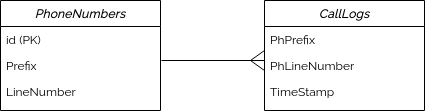

The following example will mask a composite unique key of a PhoneNumbers table. The composite key consists of the following columns:

- Prefix VARCHAR(3): containing a zero-padded integer in the range 1-150, e.g. 001, 002, etc.
- LineNumber VARCHAR(6): containing a zero-padded integer in the range 50,001-100,000.

Values matching these specific formats can be generated using the following format strings:

- '{!int, 1:150, pad}': Generates integers between 1 and 150 (inclusive), zero-padded to a fixed width of 3 characters.
- '{!int, 50001:100000, pad}': Generates integers between 50,001 and 100,000 (inclusive), zero-padded to a fixed width of 6 characters.

Because both of these formats have an upper bound, we must consider the maximum number of unique composite values that are available in this space. Multiplying the number of values in the two ranges (150 * 50,000), we can determine that these two format strings will supply 7,500,000 unique composite values. As a result, this task would fail if applied to a table containing more than 7,500,000 (non-null) rows.
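The capacity arithmetic above generalises: each bounded placeholder contributes a factor (the size of an inclusive integer range, or a character-set size raised to the number of characters), fixed text contributes a factor of 1, and the number of unique values is the product of the factors. A minimal sketch with hypothetical helper names:

```python
from math import prod
from typing import Iterable


def int_range_size(minimum: int, maximum: int) -> int:
    """Number of integers in the inclusive range minimum..maximum."""
    return maximum - minimum + 1


def charset_size(set_size: int, length: int) -> int:
    """Number of distinct strings of the given length drawn from a character set."""
    return set_size ** length


def capacity(factors: Iterable[int]) -> int:
    """Total unique values available across all bounded placeholders."""
    return prod(factors)


if __name__ == "__main__":
    phone = capacity([int_range_size(1, 150), int_range_size(50001, 100000)])
    print(phone)  # 7500000
    rows = 8_000_000
    print("fits" if rows <= phone else "too many rows for this format")
```

The same calculation for a format such as X{[A-Z],2}-{[0-9],3} gives 26² × 10³ = 676,000 possible values.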

In addition to the PhoneNumbers table, this schema also includes a CallLogs table containing the columns PhPrefix and PhLineNumber, which are references to the values in the Prefix and LineNumber columns of the PhoneNumbers table. However, due to specific requirements of this schema, these references are not defined using a foreign key constraint. Without a foreign key, DataMasque will not automatically propagate the replacement values generated for the PhoneNumbers table to the CallLogs table.

In order to ensure the referential integrity of these implicit references is maintained during masking, this example uses additional_cascades to instruct DataMasque on how to cascade updated values to these columns. DataMasque will take the values of the Prefix and LineNumber columns of the PhoneNumbers table and propagate these values to the PhPrefix and PhLineNumber columns of the CallLogs table.

```yaml
version: '1.0'
tasks:
  - type: mask_unique_key
    table: PhoneNumbers
    target_key:
      - column: Prefix
        format: "{!int, 1:150, pad}"
      - column: LineNumber
        format: "{!int, 50001:100000, pad}"
    additional_cascades:
      - table: CallLogs
        columns:
          - source: Prefix
            target: PhPrefix
          - source: LineNumber
            target: PhLineNumber
```


Example 3

Consider a database with two tables and two schemas: Customers in the Accounts schema, and Transactions in the Sales schema. The following example will mask the primary key column CustomerId of the Accounts.Customers table. However, any changes made to this CustomerId column must also be reflected in the Customer column of the Sales.Transactions table. Due to specific requirements of this database, these cross-schema references are not defined using a foreign key constraint. In order to maintain referential integrity, this example uses additional_cascades to instruct DataMasque how to cascade updated values to the relevant column in the other schema.

The Customer ID consists of 3 letters, followed by a hyphen, then a 4-digit number. This will be constructed using a format string to ensure any values generated conform to the required standards.

```yaml
version: "1.0"
tasks:
  - type: mask_unique_key
    table: Accounts.Customers
    target_key:
      - column: CustomerId
        format: "{[a-z],3}-{[0-9],4}"
    additional_cascades:
      - table: Sales.Transactions
        columns:
          - source: CustomerId
            target: Customer
```


Format string syntax

The format string syntax used by DataMasque to generate unique replacement values supports format strings made up of a combination of alphanumeric characters and symbols. You can use it to generate key values that combine randomly generated portions with fixed formatting, producing keys that match the format required in your key columns.

Format strings consist of fixed values, as well as variable values that are declared within braces {}. Values within braces are given a set of characters to use, followed by the number of characters to generate. Any values not declared within braces are fixed values.

Format strings can be constructed using character sets. Each character set is written inside braces, followed by a comma and a number indicating how many characters from the set are to be generated, for example {[a-z],2}.

| Character Set | Description |

|---|---|

| [a-z] | Lower case alphabetical characters. |

| [A-Z] | Upper case alphabetical characters. |

| [0-9] | Numerical characters. |

| [aeiou] | Any vowel. Characters can be individually specified without using a range. |

An example format string is shown below.

format: "{[a-z],2}_{[A-Z],2}-{[a-zA-Z],3}#{[0-9],5}"

In this example, there are four sets of curly braces within the format string, each providing a set of characters followed by a number indicating how many of these characters to generate.

- The first braces specify {[a-z],2}, which will generate a string of 2 lower case alphabetical characters.
- After this, there is an underscore outside the braces, which means all generated values will have an underscore after the 2 alphabetical characters.
- The second braces specify {[A-Z],2}, which will generate a string of 2 upper case alphabetical characters.
- After this second variable, there is a hyphen -, meaning a hyphen will always be present after the 2 upper case characters.
- The third braces specify {[a-zA-Z],3}, which will generate a string of length 3 consisting of both lower case and upper case alphabetical characters.
- After this, there is a hash #, meaning a hash character will always be placed after the third generated string.
- The final braces specify {[0-9],5}, meaning a 5-digit number is placed at the end of the string.

The following values are some example outputs that may be generated using the format string above:

ab_TJ-RaK#10496

pt_OQ-TRu#49511

iu_QE-unT#67312

nd_UL-bES#97638
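To make the placeholder syntax concrete, the sketch below parses {[set],n} placeholders, expands ranges such as a-z as well as individually listed characters such as aeiou, copies everything outside braces verbatim, and collects distinct values. It is a hypothetical illustration of the syntax described above, not DataMasque's generator: it only handles character-set placeholders (not {!int}) and enforces uniqueness with a simple retry loop.

```python
import random
import re
from typing import List, Set

PLACEHOLDER = re.compile(r"\{\[([^\]]+)\],(\d+)\}")  # e.g. {[a-zA-Z],3}


def expand_charset(spec: str) -> List[str]:
    """Expand 'a-zA-Z' or 'aeiou' into a list of characters."""
    chars: List[str] = []
    i = 0
    while i < len(spec):
        if i + 2 < len(spec) and spec[i + 1] == "-":
            chars.extend(chr(c) for c in range(ord(spec[i]), ord(spec[i + 2]) + 1))
            i += 3
        else:
            chars.append(spec[i])
            i += 1
    return chars


def generate(fmt: str, rng: random.Random) -> str:
    """Produce one value: fixed text is copied, each placeholder is filled randomly."""
    parts: List[str] = []
    pos = 0
    for match in PLACEHOLDER.finditer(fmt):
        parts.append(fmt[pos:match.start()])  # fixed text before the placeholder
        chars = expand_charset(match.group(1))
        parts.append("".join(rng.choice(chars) for _ in range(int(match.group(2)))))
        pos = match.end()
    parts.append(fmt[pos:])  # trailing fixed text
    return "".join(parts)


def generate_unique(fmt: str, count: int, rng: random.Random) -> List[str]:
    """Generate `count` distinct values (assumes count is well below the format's capacity)."""
    seen: Set[str] = set()
    values: List[str] = []
    while len(values) < count:
        value = generate(fmt, rng)
        if value not in seen:
            seen.add(value)
            values.append(value)
    return values


if __name__ == "__main__":
    for v in generate_unique("{[a-z],2}_{[A-Z],2}-{[a-zA-Z],3}#{[0-9],5}", 4, random.Random(42)):
        print(v)
```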

Example

In this example, we wish to mask a series of number plates.

Each number plate consists of 3 letters, followed by a hyphen and 3 numerical digits. We wish to replace the first letter of each number plate with X, followed by 2 random letters, a hyphen, and a random 3-digit number.

A snippet of the table is shown below, where number_plate is a unique key of the table.

car_registration Table

| number_plate | car_owner |
|---|---|
| AAA-111 | Anastasia |
| BBB-222 | Bill |
| CCC-333 | Chris |
| DDD-444 | Judith |
| EEE-444 | Gordon |

In this case, we will use the following format string.

format: "X{[A-Z],2}-{[0-9],3}"

This generates a fixed X, followed by 2 upper case alphabetical characters, as defined by {[A-Z],2}. Next there is a hyphen outside of braces, so a literal hyphen will always be generated. Finally, {[0-9],3} generates 3 random numerical digits.
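Because mask_unique_key needs a distinct value for every row, it can help to check how many values a format string can produce. For X{[A-Z],2}-{[0-9],3} the count is 26 × 26 × 10 × 10 × 10 = 676,000, since the literal X and hyphen each contribute only one possibility. The Python sketch below performs the same calculation for any character-set format string; charset_size and capacity are hypothetical helper names, and the sketch assumes the character-set syntax described above.

```python
import re
from math import prod

# Illustrative sketch; not part of DataMasque.
# One "{[charset],count}" segment; literal text outside segments is fixed.
SEGMENT = re.compile(r"\{\[([^\]]+)\],(\d+)\}")


def charset_size(spec: str) -> int:
    """Count the distinct characters in a set such as "A-Z" or "aeiou"."""
    chars = set()
    i = 0
    while i < len(spec):
        if i + 2 < len(spec) and spec[i + 1] == "-":
            chars.update(chr(c) for c in range(ord(spec[i]), ord(spec[i + 2]) + 1))
            i += 3
        else:
            chars.add(spec[i])
            i += 1
    return len(chars)


def capacity(fmt: str) -> int:
    """Number of distinct values a character-set format string can produce."""
    return prod(charset_size(spec) ** int(count) for spec, count in SEGMENT.findall(fmt))


print(capacity("X{[A-Z],2}-{[0-9],3}"))  # 676000
```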

The following ruleset uses this format string in a mask_unique_key task.

version: "1.0"
tasks:
  - type: mask_unique_key
    table: car_registration
    target_key:
      - column: number_plate
        format: "X{[A-Z],2}-{[0-9],3}"


Integer string format syntax

The !int operator can be used to generate integers. In its most basic use with no arguments, the format string {!int} will generate integers from 1 to infinity. Extra parameters can be added to set the output range or pad the output.

Range (min:max):

The range defines all possible integer values that may be generated by the integer generator. This is an optional parameter, defaulting to 1: (min=1, max=unbounded).

- min (optional): The minimum value which will be generated (inclusive). Defaults to 1.
- max (optional): The maximum value which will be generated (inclusive). When this value is not specified the maximum value will be unbounded, meaning it will grow depending on the number of values required.

For example:

- {!int, 5:}: generate integers from 5 (inclusive) to infinity.
- {!int, :100}: generate integers from 1 to 100 (inclusive).
- {!int, 20:80}: generate integers from 20 to 80 (inclusive).

Zero-pad (pad):

pad (optional): When specified, zero-padding will be applied to generated integers, resulting in fixed-width replacement values (e.g. 001, 002, …, 999). The zero-pad width is determined by the width of the max value, and therefore pad is only a valid option when a max value is specified.

For example:

{!int, :1000, pad}: generate strings in the format 0001, 0002, etc., up to 1000.
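The range and padding rules above can be modelled in a few lines of Python. This sketch only enumerates the candidate values in ascending order; it does not reproduce how DataMasque assigns or orders values when masking, and int_values is a hypothetical name.

```python
from itertools import count, islice
from typing import Iterator, Optional


def int_values(min_value: int = 1, max_value: Optional[int] = None,
               pad: bool = False) -> Iterator[str]:
    """Candidate values for {!int, min:max[, pad]}; both bounds are inclusive.

    Illustrative sketch only; not DataMasque's implementation.
    """
    if pad and max_value is None:
        # The pad width is taken from max, so pad needs an explicit max.
        raise ValueError("pad requires a max value")
    width = len(str(max_value)) if pad else 0
    numbers = count(min_value) if max_value is None else range(min_value, max_value + 1)
    return (str(n).zfill(width) for n in numbers)


print(list(islice(int_values(max_value=1000, pad=True), 3)))  # ['0001', '0002', '0003']
print(list(int_values(20, 80))[-1])  # 80
```

Validation happens before the generator is returned, so an invalid combination such as pad without max fails immediately rather than on first use.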

Note: Always wrap format strings in either single or double quotes. Leaving format strings unquoted in the ruleset will result in invalid YAML due to the opening { character, which has reserved usage in YAML. Examples of correctly quoted format strings:

format: '{!int}' # Single quotation marks
format: "{!int, :100, pad}" # or double quotation marks

Hex string format syntax

Integers can be generated and output in hexadecimal format by using the !hex operator. The range and pad options apply in the same way as for standard integer generation; however, range values are interpreted as hexadecimal rather than decimal.

For example:

- {!hex}: generate hex strings from 1 to infinity, i.e. 1, 2, …, a, b, …, ff, 100, etc.
- {!hex, 10:100, pad}: generate hex strings from 0x10 to 0x100 (inclusive) with padding, i.e. 010, 011, …, 0fe, 0ff, 100.
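The same idea applies to !hex, except that the bounds are parsed as hexadecimal and the pad width is the number of hex digits in the maximum. This Python sketch (hex_values is a hypothetical name) lists candidate values in ascending order and is not DataMasque's implementation.

```python
from itertools import count, islice
from typing import Iterator, Optional


def hex_values(min_hex: str = "1", max_hex: Optional[str] = None,
               pad: bool = False) -> Iterator[str]:
    """Candidate values for {!hex, min:max[, pad]}; bounds are read as hex.

    Illustrative sketch only; not DataMasque's implementation.
    """
    if pad and max_hex is None:
        raise ValueError("pad requires a max value")
    low = int(min_hex, 16)
    high = None if max_hex is None else int(max_hex, 16)
    # Zero-pad to the number of hex digits in the maximum value.
    width = len(format(high, "x")) if pad else 0
    numbers = count(low) if high is None else range(low, high + 1)
    return (format(n, "x").zfill(width) for n in numbers)


values = list(hex_values("10", "100", pad=True))
print(values[0], values[-2], values[-1])  # 010 0ff 100
```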

UUID string format syntax

You may choose to generate values in the Universally Unique Identifier (UUID) format by declaring a !uuid format string.

A UUID is a string of 32 hexadecimal digits (0 to 9, a to f), split by hyphens into groups of 8-4-4-4-12 characters. An example UUID would be 12345678-90ab-cdef-1234-567890abcdef.

To generate a UUID as the unique key, specify !uuid in the format as shown below.

format: "{!uuid}"

You may also specify a prefix of up to 8 characters within the format string. This ensures that the first characters of every UUID are always static. For example, specifying format: "{!uuid,aaaa}" will cause the first 4 characters of every UUID generated by the ruleset to be aaaa.
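The prefix behaviour can be sketched in Python as follows. This assumes (it is not stated above) that the remaining characters come from a random version 4 UUID generated with the standard uuid module; uuid_with_prefix is a hypothetical helper. Because the prefix is limited to 8 characters, it always fits inside the first 8-character group, so the hyphen layout is never disturbed.

```python
import uuid


def uuid_with_prefix(prefix: str = "") -> str:
    """Random UUID string whose first characters are replaced by prefix.

    Illustrative sketch only; assumes random (version 4) UUIDs.
    """
    if len(prefix) > 8:
        raise ValueError("the prefix may be at most 8 characters long")
    value = str(uuid.uuid4())
    # The first hyphen is at index 8, so an 8-character prefix never reaches it.
    return prefix + value[len(prefix):]


print(uuid_with_prefix("aaaa"))
```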

Alternatives string format syntax

You can have DataMasque select one value from a set of alternatives for each generated value.

Such a segment can be specified by wrapping your set of pipe/|-separated alternatives in parentheses ().

For example, {(EN|FR)}-{!int} can be used to generate an integer prefixed by either EN or FR.

At least two alternatives must be specified.

Note: This should only be used with the mask_table task, as the generated values are not guaranteed to be unique, which the mask_unique_key task requires.
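A short Python sketch of an alternatives segment shows why uniqueness cannot be guaranteed: with only two options, any three draws must repeat a value. The regular expression and the pick_alternatives helper are assumptions for illustration; other segments such as {!int} are left untouched here.

```python
import random
import re

# Illustrative sketch; not DataMasque's implementation.
# Matches one "{(A|B|...)}" alternatives segment.
ALTERNATIVES = re.compile(r"\{\(([^()]*)\)\}")


def pick_alternatives(fmt: str, rng: random.Random) -> str:
    """Replace each alternatives segment with one randomly chosen option."""
    def choose(match: re.Match) -> str:
        options = match.group(1).split("|")
        if len(options) < 2:
            raise ValueError("at least two alternatives must be specified")
        return rng.choice(options)

    return ALTERNATIVES.sub(choose, fmt)


rng = random.Random(7)
print(pick_alternatives("{(EN|FR)}-{!int}", rng))  # "EN-{!int}" or "FR-{!int}"
draws = [pick_alternatives("{(EN|FR)}", rng) for _ in range(3)]
# Three draws from two options always contain a duplicate.
print(len(set(draws)) < len(draws))
```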

Cascading of masked values

Referential integrity of data references to the target_key of a mask_unique_key task is maintained by "cascading" the masked replacement values to each reference. DataMasque will perform this cascade automatically for relationships defined by a database foreign key constraint. Masked values can also be cascaded to columns that are not members of such a foreign key constraint by using the additional_cascades feature.

Any rows of a cascade target (child table) which contain values that are not present in the target_key (on the parent table) will have their cascade target columns set to NULL. This situation may occur in one of the following cases:

- The cascade target is a foreign key that has at some point been disabled, had values updated, then been re-enabled without being checked / validated, i.e.:
  - For Oracle, the constraint was re-enabled using NOVALIDATE.
  - For Microsoft SQL Server, the constraint was re-enabled without using WITH CHECK.
  - For PostgreSQL, the constraint was dropped and recreated instead of being disabled and re-enabled.
- The cascade target is an implicit reference without database constraints.

This behaviour is designed to ensure that no rows are left unmasked on the cascade target.
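The NULL rule can be pictured with a small in-memory model in Python: the parent's mapping from original to masked key values is applied to every child reference, and references with no entry in the mapping become None, standing in for NULL. This is only a conceptual sketch; cascade is a hypothetical function, the masked values are made up, and DataMasque performs the cascade inside the database rather than in Python.

```python
from typing import Dict, List, Optional


def cascade(child_refs: List[Optional[str]],
            key_mapping: Dict[str, str]) -> List[Optional[str]]:
    """Rewrite child references using the parent's original -> masked mapping.

    Conceptual sketch only. References missing from the parent (orphans) and
    existing NULLs both become None, so no original key value survives
    unmasked in the child table.
    """
    return [key_mapping.get(ref) for ref in child_refs]


# Hypothetical masked values for two parent keys.
mapping = {"AAA-111": "XQK-402", "BBB-222": "XZD-917"}
print(cascade(["AAA-111", "BBB-222", "ZZZ-999", None], mapping))
# ['XQK-402', 'XZD-917', None, None]
```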

Important!

- DataMasque will only automatically cascade to foreign keys that are enabled. Foreign keys that are present, but disabled at the time of masking will be excluded.
- For Oracle databases, when defining additional_cascades for mask_unique_key tasks, all columns of the target_key must be referenced as source columns. Partial cascades that reference a subset of the target key columns are disallowed.
- For an example of additional_cascades, please refer to the notes under the parameters section of Mask a Primary or Unique key.

Build a temporary table

If you need to repeatedly join multiple tables when masking tables, or you need to perform some custom SQL transformations to column values, then you may wish to use the build_temp_table task type to create a temporary table which can then be accessed via a join during a mask_table task.

Parameters

- table_name (required): The name of the temporary table to create in the database. You will need to use this name when referencing this temporary table later (e.g. in joins and masking rules). The table name can be prefixed with a schema name if the temporary table should be created in a schema other than the user's default schema.
- sql_select_statement (this OR sql_select_file required): A string containing a SELECT statement to define the contents of the temporary table. To break the statement across multiple lines, you may use multi-line YAML syntax (|- or >-).
- sql_select_file (this OR sql_select_statement required): The name of a user-uploaded SQL script file containing a SELECT query to define the contents of the temporary table. See the Files guide for more information on uploading SQL script files. Use this parameter if you have a complex / long query, or you wish to share the same query between many rulesets.

Example (sql_select_statement)

version: '1.0'
tasks:
  - type: build_temp_table
    table_name: my_temporary_table
    sql_select_statement: >-
      SELECT accounts.account_id, address.city
      FROM accounts
      INNER JOIN address
      ON accounts.address_id = address.id
      WHERE accounts.country = 'New Zealand';
  - type: mask_table
    table: my_temporary_table
    key: id
    rules:
      - column: city
        masks:
          - type: from_file
            seed_file: DataMasque_NZ_addresses.csv
            seed_column: city

Example (sql_select_file)

version: '1.0'
tasks:
  - type: build_temp_table
    table_name: my_temporary_table
    sql_select_file: create_temp_table.sql
  - type: mask_table
    table: my_temporary_table
    key: id
    rules:
      - column: city
        masks:
          - type: from_file
            seed_file: DataMasque_NZ_addresses.csv
            seed_column: city

Notes:

- DataMasque will create the temporary tables before applying any masks, and delete them after all tables have been masked. DataMasque will also ensure the temporary tables do not already exist in the database (removing existing temporary tables with the same name if needed). The temporary tables you define will only be available for use in joins, and cannot be masked themselves.
- For Microsoft SQL Server databases, temporary table names must begin with the ## characters, as they will be created as 'Global Temporary Tables' so that they are visible to all parallel masking connections. However, in YAML the # character begins an inline comment, so the temporary table name must be wrapped in double or single quotes (e.g. '##my_temporary_table').
- For Oracle and PostgreSQL databases, 'temporary tables' are created as regular tables so that they are visible to all parallel masking connections.
- The build_temp_table task type is not currently supported for Microsoft SQL Server (Linked Server) databases.

Run SQL

Use the run_sql task type if you need to:

- Run SQL scripts to prepare the database for masking.

- Clean up after a masking run (e.g. disabling/enabling triggers).

- Run simple update operations.

You can supply SQL for DataMasque to execute either as a script file (see Files guide), or inline in the ruleset:

Parameters

- sql (this OR sql_file required): An SQL script to be executed. For multi-line scripts, you may use the YAML block style syntax (|-).
- sql_file (this OR sql required): The name of a user-provided file containing an SQL script to be executed (see Files guide). Use this parameter if you have large blocks of SQL to run, or scripts that you wish to share between many rulesets.

Example Microsoft SQL Server (sql)

Note: This example uses Microsoft SQL Server-specific syntax, as it switches to the master database with USE [master].

version: '1.0'
tasks:
  - type: run_sql
    sql: |-
      USE [master];
      ALTER DATABASE eCommerce SET RECOVERY SIMPLE WITH NO_WAIT;
      USE [eCommerce];
      ALTER TABLE [SalesRecords].[Customer] DROP CONSTRAINT [FK_SALESRECORDS_CUSTOMER];
      ALTER TABLE [Invoices].[Customer] DROP CONSTRAINT [FK_INVOICES_CUSTOMER];

Example (sql_file)

tasks:
  - type: run_sql
    sql_file: pre_script_1.sql

Notes:

- The run_sql task type executes in autocommit mode, and will exit on the first error encountered.
- The run_sql task type does not run in dry run mode.
- The run_sql task type is not currently supported for Microsoft SQL Server (Linked Server) databases.
- For PostgreSQL and MySQL connections, the SQL interpreter treats a colon followed by letters or numbers as a bound parameter. The colon can be escaped with a \ before it. For example, when attempting to insert JSON data:

  INSERT INTO table_name (column_name) VALUES('{"is_real" :true, "key":"value"}'::json)

  run_sql will interpret true as a bound parameter. To fix this, escape the bound parameter by adding a backslash (\) before the colon (:) as follows:

  INSERT INTO table_name (column_name) VALUES('{"is_real" \:true, "key":"value"}'::json)
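If you generate run_sql scripts programmatically, the escaping rule above can be applied with a regular expression. This Python sketch (escape_bind_colons is a hypothetical helper) escapes a colon that is followed by a letter or digit, while leaving PostgreSQL :: casts and already-escaped colons alone. It follows only the rule as described in this note, so check the result against your database before relying on it.

```python
import re

# Illustrative sketch; follows only the rule described in the note above.
# A colon followed by a letter or digit. The lookbehind skips the second colon
# of a "::" cast and any colon already escaped with a backslash.
BIND_LIKE_COLON = re.compile(r"(?<![:\\]):(?=[A-Za-z0-9])")


def escape_bind_colons(sql: str) -> str:
    """Prefix bind-parameter-like colons with a backslash."""
    return BIND_LIKE_COLON.sub(r"\\:", sql)


raw = """INSERT INTO table_name (column_name) VALUES('{"is_real" :true, "key":"value"}'::json)"""
print(escape_bind_colons(raw))
# INSERT INTO table_name (column_name) VALUES('{"is_real" \:true, "key":"value"}'::json)
```

Because escaped colons are skipped, running the helper twice gives the same result as running it once.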

Notes for Oracle:

- It is recommended to test the execution of your SQL script with Oracle SQL*Plus before use in a run_sql task.
- DataMasque prepends "WHENEVER SQLERROR EXIT SQL.SQLCODE" to the SQL script, so the run_sql task will exit on the first error encountered.
- Even if the database raises errors while executing the SQL script, some statements may still have finished executing successfully. It is recommended to check the database after any failed run_sql task.
- run_sql does not use the schema specified in the connection configuration; instead, it will default to the schema of the user. If you wish to change schema, specify it in the script (e.g. with ALTER SESSION SET CURRENT_SCHEMA).

Notes for Microsoft SQL Server:

- It is recommended to test the execution of your SQL script with Microsoft sqlcmd or SQL Server Management Studio before use in a run_sql task.
- Even if the database raises errors while executing the SQL script, some statements may still have finished executing successfully. It is recommended to check the database after any failed run_sql task.

Notes for PostgreSQL:

- It is recommended to test the execution of your SQL script with psql before use in a run_sql task.
- A run_sql task will be executed with a simple query cycle, where statements are executed in a single transaction (unless explicit transaction control commands are included to force a different behaviour).

Notes for Redshift:

- Using multiple SQL statements in a single run_sql task is not currently supported.

Notes for MySQL:

- It is recommended to test the execution of your SQL script with MySQL Shell before use in a run_sql task.
- Executing run_sql tasks with more than one statement may not raise errors upon failure. If the first statement executes correctly but subsequent statements fail, errors may not be raised.

Truncate a table

Use the truncate_table task type to specify tables to be truncated by DataMasque. All rows will be deleted, but the table structure will be left in place.

Parameters

table(required): The name of the table to truncate. The table name can be prefixed with a schema name to reference a table in another schema.

Example

tasks:
  - type: truncate_table
    table: history_table
...

Notes:

- The truncate_table task type does not run in dry run mode.
- The truncate_table task type is not currently supported for Microsoft SQL Server (Linked Server) databases.

Parallel Tasks

Using the parallel task type, you can specify a block of tasks to be executed in parallel, spread across as many workers as are available.

Each parallel task distributes to a maximum of 10 sub-tasks. It is recommended to begin testing parallelisation with at most 4 tasks in parallel, then increase parallelisation if the database has more capacity.

Parallel tasks can be nested inside other serial/parallel tasks.

Parameters

tasks(required): A set of tasks to perform in parallel.

Example

tasks:
  - type: parallel
    tasks:
      - type: mask_table
        table: employees
        key: id
        rules:
          - column: 'name'
            masks:
              - type: from_fixed
                value: 'REDACTED'
      - type: mask_table
        table: customers
        key: id
        rules:
          - column: 'address'
            masks:
              - type: from_fixed
                value: 'REDACTED'
...

Warning: You should not mask the same table in multiple tasks (including mask_table and run_sql tasks) in parallel, as this could result in data being incorrectly masked.

Serial Tasks

Although tasks are performed serially in the order they are listed in the ruleset by default, you can specify a block of tasks to be performed in serial within a parallel block. This is useful when a subset of parallelisable tasks have dependencies that mean they must be executed in sequence.

Serial tasks can be nested inside other serial/parallel tasks.

Parameters

tasks(required): A set of tasks to perform in series.

Example

tasks:
  - type: parallel
    tasks:
      - type: serial
        tasks:
          - type: run_sql
            sql_file: pre_employees_script.sql
          - type: mask_table
            table: 'employees'
            key: id
            rules:
              - column: 'name'
                masks:
                  - type: from_fixed
                    value: 'REDACTED'
      - type: mask_table
        table: 'customers'
        key: id
        rules:
          - column: 'address'
            masks:
              - type: from_fixed
                value: 'REDACTED'
...

Masking files

Each mask_file or mask_tabular_file task specifies the masking rules to apply to each file in the base directory and/or any subdirectories, as well as any files/directories to be skipped or included, and any conditionals required to define which data to mask in the masking process.

Masking rules and masks are applied sequentially in the order they are listed. When multiple masks are combined in sequence, the output value from each mask is passed as the input to the next mask in the sequence.

Note If the source connection and destination connection are of the same type and have the same base directory, the files will be overwritten. The list of files is read at the start of the masking run, so new files added during the masking run will not be masked and will not be present in the destination.

File task types

A ruleset consists of a list of tasks to be performed in sequence on the target data source. After each file is masked, it is written to the selected data destination.

Object file masks

Each mask_file task defines masking operations to be performed on a file or set of files. More detail is provided about these tasks under the Masking files section.

Parameters

Each task with type mask_file is defined by the following parameters:

- rules (required): A list of masking rules (or dictionary mapping arbitrary keys to rules) to apply to the file. Rules are applied sequentially according to the order they are listed. Regular (non-conditional) masking rules are defined by the following attributes:
  - masks (required): A list of masks (or dictionary mapping arbitrary keys to masks) to apply to the file. Masks are applied sequentially according to the order they are listed, so the output value from each mask is passed to the next mask in the list. Each type of mask has a different set of required attributes, as described for each type in Mask types.
- recurse (optional): A boolean value; when set to true, any folders in the Data Source will be recursed into and the files they contain will also be masked. Defaults to false.
- workers (optional): The number of parallel workers to use for this masking task. Defaults to 1.
- skip (optional): Specifies files/directories to leave unmasked.
  - regex (optional): A regex; files whose names match it are skipped.
  - glob (optional): A glob; folders whose names match it are skipped.
- include (optional): Specifies files/directories to include.
  - regex (optional): A regex; files whose names match it are included in the masking run.
  - glob (optional): A glob; directories whose names match it are included in the masking run.
- encoding (optional): The encoding to use when reading and writing files. Defaults to UTF-8. Refer to Python Standard Encodings for a list of supported encodings.

For more information about the ordering of skip and include, please refer to Include/Skip.

Note: regex/glob will match against the path relative to the base directory specified in the source connection, so consider adding .* (regex) or * (glob) to the beginning of the expression. For example, if the file is /path1/path2/target_file.json, the base directory is path1/ and recurse: true is set in the ruleset, the regex/glob will try to match path2/target_file.json. When including a path by specifying a glob such as target_path/*, the recurse option needs to be set to true; otherwise the included path won't be entered and the files it contains will not be masked.

Supported file types

In general, mask_file has been designed to mask JSON or XML files. Each file is loaded as a string and passed to the masks. Therefore, to mask a JSON file, use a json mask, as in the following example:

version: "1.0"
tasks:
  - type: mask_file
    recurse: true
    skip:
      - regex: '^(.*)2.json'
      - glob: "input/*"
    include:
      - glob: "other_inputs/*.json"
    rules:
      - masks:
          - type: json
            transforms:
              - path: ['name']
                masks:
                  - type: from_fixed
                    value: REDACTED

This would replace the root name attribute in the JSON with the text REDACTED.

Similarly, for XML files, use an xml mask:

version: "1.0"
tasks:
  - type: mask_file
    recurse: true
    skip:
      - regex: '^(.*)2.xml'
      - glob: "input/*"
    include:
      - glob: "other_inputs/*.xml"
    rules:
      - masks:
          - type: xml
            transforms:
              - path: 'User/Name'
                node_transforms:
                  - type: text
                    masks:
                      - type: from_fixed
                        value: REDACTED

This would replace the content of the node(s) at User/Name with the text REDACTED.

To mask other types of files, basic redaction is possible. For example, to replace the contents of every txt file with the text REDACTED:

version: "1.0"
tasks:
  - type: mask_file
    recurse: true
    include:
      - glob: "*.txt"
    rules:
      - masks:
          - type: from_fixed
            value: REDACTED

It is possible to use any mask that accepts text input (or no input), although their effectiveness will depend on the size and content of the input file.

Note also that files that are not processed will not be copied from the source to the destination. That is, DataMasque will either load a file (based on skip/include rules), mask it, then copy it to the destination, or it will ignore the file. Unmasked files will not be copied to the destination.

Tabular file masks

Each mask_tabular_file task defines masking operations to be performed on a file or set of files (CSV, Parquet or fixed-width columns). More detail is provided about these tasks under the Masking files section.

Parameters

Each task with type mask_tabular_file is defined by the following parameters:

- rules (required): A list of masking rules (or dictionary mapping arbitrary keys to rules) to apply to the file. Rules are applied sequentially according to the order they are listed. Regular (non-conditional) masking rules are defined by the following attributes:
  - column (required): A column within the tabular file intended for masking. This is the column's header (for CSV files), the column name (for Parquet files) or the name given in fixed_width_column_names (for fixed-width files).
  - masks (required): A list of masks (or dictionary mapping arbitrary keys to masks) to apply to the column. Masks are applied sequentially according to the order they are listed, so the output value from each mask is passed to the next mask in the list. Each type of mask has a different set of required attributes, as described for each type in Mask types.
- recurse (optional): A boolean value; when set to true, any folders in the Data Source will be recursed into and the files they contain will also be masked. Defaults to false.
- workers (optional): The number of parallel workers to use for this masking task. Defaults to 1.
- skip (optional): Specifies files/directories to leave unmasked.
  - regex (optional): A regex; files whose names match it are skipped.
  - glob (optional): A glob; directories whose names match it are skipped.
- include (optional): Specifies files/directories to include.
  - regex (optional): A regex; files whose names match it are included in the masking run.
  - glob (optional): A glob; directories whose names match it are included in the masking run.
- encoding (optional): The encoding to use when reading and writing files. Defaults to UTF-8. Refer to Python Standard Encodings for a list of supported encodings.
- fixed_width_extension (optional): The file extension that fixed-width files have. Not required if no fixed-width files are to be masked. Should not include a leading . (e.g. specify txt, not .txt).
- fixed_width_columns_indexes (optional): An array of two-element arrays of start and end indexes of the fixed-width columns. Required if fixed_width_extension is specified.
- fixed_width_column_names (optional): An array of strings defining the names of the fixed-width columns, used to refer to them in masking rules. Required if fixed_width_extension is specified, and must match the length of fixed_width_columns_indexes.
- fixed_width_too_wide_action (optional): The action to take if masked data exceeds the width of the column: either truncate, to truncate the value to fit in the column, or error, to raise an error and stop the masking run. Defaults to truncate.
- fixed_width_line_ending (optional): The line ending to use when writing out the fixed-width data. DataMasque will attempt to detect it from the input file, otherwise it defaults to \n.

For more information about:

- How tabular file types are detected, see Tabular File Type Detection.
- Parameters for fixed-width file masking, see Fixed Width File Masking Parameters.
- The ordering of skip and include, please refer to Include/Skip.

Table joins are not supported in tabular file masking.

Note: regex/glob will match against the path relative to the base directory specified in the source connection, so consider adding .* (regex) or * (glob) to the beginning of the expression. For example, if the file is /path1/path2/target_file.json, the base directory is path1/ and recurse: true is set in the ruleset, the regex/glob will try to match path2/target_file.json. When including a path by specifying a glob such as target_path/*, the recurse option needs to be set to true; otherwise the included path won't be entered and the files it contains will not be masked.

version: "1.0"
tasks:
  - type: mask_tabular_file
    recurse: true
    skip:
      - regex: '^(.*)2.json'
      - glob: "input/*"
    include:
      - glob: "other_inputs/*"
    rules:
      - column: name
        masks:
          - type: from_fixed
            value: REDACTED

Tabular File Type Detection

DataMasque uses file extensions to determine how tabular files are loaded for masking. The detection is not case-sensitive. Files with the extension csv are treated as CSV files. Files with the extension parquet are treated as Apache Parquet files.

Note: CSV files require header columns for tabular masking, as the header columns are used as column names during masking. CSV and fixed-width files are string-based, so values should be cast to other types if they are used with masks that require specific types (e.g. numeric_bucket). To do this, use a typecast mask; for more information please refer to Typecast.

DataMasque will only attempt to load fixed-width files if fixed_width_extension is specified, and will treat any files with this extension as fixed-width. See also Fixed Width File Masking Parameters.

Once files are loaded they are all masked in the same way, that is, rules are executed and applied on a per row/column basis regardless of the original source type. Data will be written back out in the same format as it was read.
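The extension rules can be summarised as a small Python function. This is a sketch with a hypothetical name, detect_tabular_type; it assumes that the fixed_width_extension comparison is also case-insensitive and returns None for any other file, without modelling what DataMasque then does with such files.

```python
from pathlib import PurePosixPath
from typing import Optional


def detect_tabular_type(path: str, fixed_width_extension: Optional[str] = None) -> Optional[str]:
    """Classify a file by its extension, ignoring case.

    Illustrative sketch only; None means "not one of the tabular types".
    """
    extension = PurePosixPath(path).suffix.lower().lstrip(".")
    if extension == "csv":
        return "csv"
    if extension == "parquet":
        return "parquet"
    # Fixed-width loading only applies when fixed_width_extension is configured
    # (given without a leading dot, e.g. "txt"). Case-insensitivity is assumed.
    if fixed_width_extension and extension == fixed_width_extension.lower():
        return "fixed_width"
    return None


print(detect_tabular_type("exports/Users.CSV"))  # csv
print(detect_tabular_type("users.txt", "txt"))   # fixed_width
```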

Fixed Width File Masking Parameters

Masking of fixed-width files is only attempted if fixed_width_extension is specified. If fixed_width_extension is present in the ruleset without fixed_width_columns_indexes and fixed_width_column_names, then an error will be raised. However, it is valid to omit fixed_width_columns_indexes and fixed_width_column_names if fixed_width_extension is also absent.

If fixed_width_extension is set, then DataMasque will treat any files with that extension as fixed-width and load them based on the other fixed-width options. To help explain the rules, consider an example file called users.txt with the following content:

Adam    2010-01-01 AAA-1111
Brenda  2010-01-01 EEE-5555
Charlie 2010-02-02 GGG-7777

It has 3 columns. The first contains a name and spans from index 0 to 8. The second contains a date and spans from index 8 to 19. The final column contains a transaction ID and spans from index 19 to 27. In each pair, the start index is inclusive and the end index is exclusive.

Note that these indexes are specified to be contiguous, as some fixed-width formats require contiguous columns; therefore a trailing space is included in the first and second columns. DataMasque automatically strips leading and trailing spaces when the data is read. Contiguous columns are not required though, so the same result could be achieved with indexes (0, 7), (8, 18), (19, 27). When non-contiguous columns are specified, DataMasque inserts spaces in between columns.
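The reading and writing rules above can be sketched in Python with plain string slicing. The function names are hypothetical, and the sketch assumes that values are left-aligned and space-padded within their column when written back out; DataMasque's actual writer may differ. The too_wide_action argument mirrors the truncate and error choices of fixed_width_too_wide_action.

```python
from typing import Dict, Sequence, Tuple

Span = Tuple[int, int]  # (start inclusive, end exclusive)


def read_fixed_width(line: str, spans: Sequence[Span], names: Sequence[str]) -> Dict[str, str]:
    """Slice each column out of a line and strip surrounding spaces (sketch)."""
    return {name: line[start:end].strip() for name, (start, end) in zip(names, spans)}


def write_fixed_width(row: Dict[str, str], spans: Sequence[Span], names: Sequence[str],
                      too_wide_action: str = "truncate") -> str:
    """Write a row back out, assuming left-aligned, space-padded values (sketch)."""
    out = ""
    for name, (start, end) in zip(names, spans):
        width = end - start
        value = row[name]
        if len(value) > width:
            if too_wide_action == "error":
                raise ValueError(f"value for {name!r} exceeds column width {width}")
            value = value[:width]  # "truncate": cut the value to fit the column
        # Fill any gap before this column (non-contiguous spans) with spaces.
        out = out.ljust(start) + value.ljust(width)
    return out


spans = [(0, 8), (8, 19), (19, 27)]
names = ["name", "date", "transaction_id"]
row = read_fixed_width("Adam    2010-01-01 AAA-1111", spans, names)
print(row)  # {'name': 'Adam', 'date': '2010-01-01', 'transaction_id': 'AAA-1111'}
```

With either the contiguous or the non-contiguous indexes, writing this row back produces the original line.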

Since fixed-width files do not have column headers, the ruleset must also specify these. They can be any arbitrary valid column identifier (i.e. an alphanumeric string without special characters) and are used to identify the columns in the masking rules. In this case they will be named name, date and transaction_id.

Putting these rules together yields a ruleset like this:

version: "1.0"
tasks:
  - type: mask_tabular_file
    recurse: true
    fixed_width_extension: txt
    fixed_width_columns_indexes:
      - [0, 8]
      - [8, 19]
      - [19, 27]
    fixed_width_column_names:
      - name
      - date
      - transaction_id
    rules:
      - column: name
        masks:
          - type: from_file
            seed_file: DataMasque_firstNames_mixed.csv
            seed_column: firstname-mixed
      - column: date
        masks:
          - type: from_random_date
            min: '1950-01-01'
            max: '2000-12-31'
          - type: typecast
            typecast_as: string
            date_format: '%Y-%m-%d'
      - column: transaction_id
        masks:
          - type: imitate

Note that when this ruleset is executed, DataMasque will still load any CSV or Parquet files it encounters, but it will use the standard loaders instead of applying the fixed-width rules. In this case, fixed-width rules will only be used for txt files.

This can be useful if CSV or Parquet files exist in the source with the same columns and need to be masked in the same manner. If such files exist and shouldn't be masked, add skip rules to skip them.

Include/Skip

When specifying which files/directories to include or skip for a mask_file/mask_tabular_file task, consider the order in which the lists are checked. The include items are checked first, followed by the skip items, so if an item matches both the include and skip lists, it will be included in the masking task.
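The documented precedence (include checked before skip) can be sketched in Python. This models only that precedence: the function returns None when neither list matches, because the default for such paths is not described here. The helper names are hypothetical; regexes are assumed to be anchored at the start of the relative path (matching the advice to prefix .*), and globs use Python's fnmatch semantics, in which * also matches /, which may differ from DataMasque's glob matching.

```python
import fnmatch
import re
from typing import Dict, List, Optional

Rule = Dict[str, str]  # e.g. {"regex": "^(.*)2.json"} or {"glob": "input/*"}


def matches(path: str, rules: List[Rule]) -> bool:
    """True if any regex or glob rule matches the path relative to the base directory."""
    for rule in rules:
        # re.match is anchored at the start of the path (assumed behaviour).
        if "regex" in rule and re.match(rule["regex"], path):
            return True
        if "glob" in rule and fnmatch.fnmatchcase(path, rule["glob"]):
            return True
    return False


def include_skip_decision(path: str, include: List[Rule], skip: List[Rule]) -> Optional[bool]:
    """Sketch of the precedence only: True = included, False = skipped, None = no match."""
    if matches(path, include):
        return True  # include is checked first, so it wins over skip
    if matches(path, skip):
        return False
    return None


print(include_skip_decision("other_inputs/a.json",
                            include=[{"glob": "other_inputs/*.json"}],
                            skip=[{"glob": "other_inputs/*"}]))  # True
```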

Masking Tables

Each mask_table task specifies the masking rules to apply to a database table, as well as any required joins and any conditionals needed to define which rows should be masked. Masking rules and masks are applied sequentially in the order they are listed. When multiple masks are combined in sequence, the output value from each mask is passed as the input to the next mask in the sequence.

Note: While mask_table is suitable for most generic masking requirements, it is not capable of masking unique keys or primary keys. Masking of such values requires the use of the special-purpose mask_unique_key task.

Selecting data to mask

DataMasque provides some advanced features for selecting additional data from the database for use in your masking rules.

Joining tables

When masking a table, you can specify a list of joins (or dictionary mapping keys to joins) that will join the rows of a target table to rows from one or more additional tables, providing you with the additional joined values to use in your masking rules.

Parameters

- target_table (required): The name of the new table you wish to join into the masking data. The target table can be prefixed with a schema name to reference a table in another schema.
- target_key (required): The key on target_table to use when performing the join. This can be specified as a single column name or a list of column names.
- source_table (required): The name of the table you wish to join the target_table with. This could be the table being masked, or another table earlier in the list of joins (allowing you to perform multi-step joins). The source table can be prefixed with a schema name to reference a table in another schema.
- source_key (required): The key on source_table to use when performing the join. This can be specified as a single column name or a list of column names.

Example

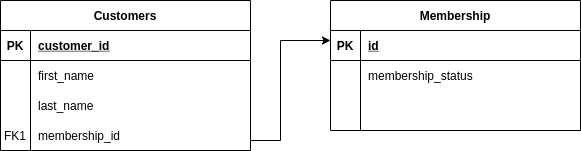

In this example, we have two tables: Customers and Membership. We would like to mask the first_name and last_name columns of the Customers table, but only if the customer's membership status is Active.

The Customers table contains data on customers, including their name and membership ID. The Membership table contains the status of each customer's membership: either Active or Inactive. The membership_id column of the Customers table has a foreign key relation with the id column of the Membership table.

Customers Table

| customer_id | first_name | last_name | membership_id |
|---|---|---|---|
| 1 | Anastasia | Rose | 10001 |
| 2 | Bill | Jones | 10002 |
| 3 | Chris | Yang | 10003 |
| 4 | Judith | Taylor | 10004 |
| 5 | Gordon | Smith | 10005 |

Membership Table

| id | membership_status |
|---|---|
| 10000 | Active |
| 10001 | Active |
| 10002 | Inactive |
| 10003 | Active |
| 10004 | Inactive |

In order to access the membership_status column of the Membership table, we need to define a join in our ruleset from the Customers table to the Membership table.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: Customers
    key: customer_id
    joins:
      - target_table: Membership
        target_key: id
        source_table: Customers
        source_key: membership_id
    rules:
      ...
```

After performing the join, we can reference the membership_status column of the Membership table in our ruleset. In this example, the column is referenced as Membership.membership_status (written as '"Membership".membership_status' in the ruleset). Using this column, we can use Conditional Masking to mask only the rows of Customers where the membership status is 'Active'.

Note: To reference a column in a joined table, the name of the joined table must be added as a prefix to the column name.

The example below uses the from_file mask type to select a random first name from the DataMasque_firstNames_mixed.csv file and a random last name from the DataMasque_lastNames.csv file, both of which can be found in our Supplementary Files user guide. It first checks whether the membership_status for the customer is 'Active' and, if so, masks the two name columns; otherwise, these columns are left unmasked.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: Customers
    key: customer_id
    joins:
      - target_table: Membership
        target_key: id
        source_table: Customers
        source_key: membership_id
    rules:
      - if:
          - column: '"Membership".membership_status'
            equals: Active
        rules:
          - column: first_name
            masks:
              - type: from_file
                seed_file: DataMasque_firstNames_mixed.csv
                seed_column: firstname-mixed
          - column: last_name
            masks:
              - type: from_file
                seed_file: DataMasque_lastNames.csv
                seed_column: lastnames
```

This example will produce the following results in the Customers table. The customers with customer_id 3 and 5 are not masked, as the status of their membership is 'Inactive' in the joined Membership table.

| customer_id | first_name | last_name | membership_id |
|---|---|---|---|
| 1 | Tia | Pallin | 10001 |
| 2 | Nikau | Koller | 10002 |
| 3 | Chris | Yang | 10003 |
| 4 | Anika | Thom | 10004 |
| 5 | Gordon | Smith | 10005 |

Note:

- For Microsoft SQL Server (Linked Server), `joins` are not currently supported.
- For Microsoft SQL Server, when using a temporary table, the name of the temporary table must be wrapped in quotation marks, as the `#` symbol in the YAML editor denotes the beginning of a comment (e.g. `target_table: '##my_temporary_table'` or `'##my_temporary_table.column'`).
- To reference a temporary table column (e.g. in the `table_filter_column` parameter of the `from_file` mask type or as part of `hash_columns`), you must prefix the column name with its table name (e.g. `table.column`).
- Any column name specified without a table prefix is assumed to belong to the table being masked (as specified by the `table` parameter for the task). You cannot specify tables that belong to other schemas.
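For example, a join from Customers to a hypothetical SQL Server temporary table named `##customer_flags` might look like the sketch below. The temporary table, its `flag` column, and the replacement value are assumptions for illustration; the points being shown are the quoted table name and the table-prefixed column reference.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: Customers
    key: customer_id
    joins:
      # Quoted: an unquoted # would start a YAML comment.
      - target_table: '##customer_flags'
        target_key: customer_id
        source_table: Customers
        source_key: customer_id
    rules:
      - if:
          # Temporary table columns must carry the table-name prefix (also quoted).
          - column: '##customer_flags.flag'
            equals: 'test'
        rules:
          - column: first_name
            masks:
              - type: from_fixed
                value: 'Test'
```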

Conditional masking

You may wish to only apply masks to rows or values that meet some conditions. DataMasque has three different methods for conditionally applying masks to meet different use cases:

| Use case | Mechanism |
|---|---|
| I want to restrict which rows are fetched for masking from the database table. | Where |
| I want to apply certain masking rules to only a subset of rows. | If |
| I want to skip applying masks to certain column values. | Skip |

Warning: Use of the conditional masking features 'where', 'skip', or 'if/else', may mean your masking rules are not applied to some database rows or values. It is recommended to verify the resulting output satisfies your masking requirements.
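To see how the three mechanisms fit together, the sketch below uses all of them in one `mask_table` task against a hypothetical `users` table: `where` limits which rows are fetched, `if` applies a rule to only some of the fetched rows, and `skip` leaves particular values untouched. The `country` and `phone` columns and the values used are assumptions, and the `where` clause is not usable on Amazon Redshift.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: id
    # Where: administrator rows are never fetched, so they are never masked.
    where: >-
      "users"."role" <> 'administrator'
    rules:
      # If: among the fetched rows, only those with country 'NZ' get this rule.
      - if:
          - column: country
            equals: 'NZ'
        rules:
          - column: phone
            # Skip: NULL and empty phone values are left unchanged.
            skip:
              - null
              - ""
            masks:
              - type: from_fixed
                value: '000 0000'
```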

Where - restricting database fetches

To restrict which rows are fetched for masking from a database table, you can specify a `where` clause for a `mask_table` task:

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: id
    where: >-
      "users"."role" <> 'administrator'
    rules:
      ...
```

The `where` clause can refer to any columns in the masked table or joined tables. All columns must be referenced using their table-qualified name (e.g. Users.FirstName). Use appropriate quoting where required, for example if the identifier is a reserved word, starts with an illegal character, or is case-sensitive.

Important!

- Any rows excluded by the `where` clause will not be masked.
- The SQL you provide for the `where` clause will not be validated before execution; please take care when constructing your SQL.
- The SQL you provide for the `where` clause should not end in a semicolon, as this will cause a masking error.
- Any string values in the `where` clause must be enclosed in single quotation marks.
- Joined tables cannot currently be referenced in the `where` clause.
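As an illustration of these rules, the fragment below extends the `users` example with a second condition on a hypothetical `last_login` column. Identifiers are table-qualified and double-quoted (ANSI SQL style; check your database's quoting rules), the string value uses single quotes, and the SQL has no trailing semicolon. The `>-` folded block scalar joins the two lines with a space and drops the final newline, so the database receives a single expression.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: id
    # Folded block scalar: both lines become one SQL expression.
    # Note: no semicolon at the end of the SQL.
    where: >-
      "users"."role" <> 'administrator'
      AND "users"."last_login" IS NOT NULL
    rules:
      - column: last_name
        masks:
          - type: from_fixed
            value: 'redacted'
```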

Note for Amazon Redshift:

- Use of the `where` clause is not yet supported for Amazon Redshift in DataMasque. This is on our roadmap and will be included in future releases.

If - conditional rules

You can choose to apply certain masking rules to only a subset of rows within a table, while still allowing other masks to be applied to those rows. This can be achieved through the use of if-conditions in rules lists.

Example

In the following example, the last_name of all users will be replaced with 'Smith', but the user's gender will determine the mask applied to their first_name:

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: id
    rules:
      - column: last_name
        masks:
          - type: from_fixed
            value: 'Smith'
      - if:
          - column: gender
            equals: 'female'
        rules:
          - column: first_name
            masks:
              - type: from_fixed
                value: 'Alice'
        else_rules:
          - if:
              - column: gender
                equals: 'male'
            rules:
              - column: first_name
                masks:
                  - type: from_fixed
                    value: 'Bob'
            else_rules:
              - column: first_name
                masks:
                  - type: from_fixed
                    value: 'Chris'
```

Parameters:

- `if` (required): A list of conditions (see below) that must all evaluate as `true` for the nested list of rules to be applied to a row.
- `rules` (required): A nested list of masking rules/nested if-conditions (or dictionary mapping labels to rules) that will only be applied to rows that meet the conditions defined under `if`.
- `else_rules` (optional): A nested list of masking rules/nested if-conditions (or dictionary mapping labels to rules) that will only be applied to rows that do NOT meet the conditions defined under `if`.

A condition under if can contain the following attributes:

- `column` (required): The database column to check this condition against. The column name can be prefixed with a table name to reference a column in another table, and that table name can be prefixed with a schema name to reference a table in another schema.
- `equals` (optional): If specified, the condition will only evaluate as `true` if the column value exactly equals the specified value. Data types are also checked (i.e. `100` is not equal to `"100"`).
- `matches` (optional): If specified, the condition will only evaluate as `true` if the string of the column value matches the specified regular expression. For more details on how to use regular expressions, see Common regular expression patterns.
- `less_than` (optional): If specified, the condition will only evaluate as `true` if the column value is a number or date/time and is less than the given value.
- `less_than_or_equal` (optional): If specified, the condition will only evaluate as `true` if the column value is a number or date/time and is less than or equal to the given value.
- `greater_than` (optional): If specified, the condition will only evaluate as `true` if the column value is a number or date/time and is greater than the given value.
- `greater_than_or_equal` (optional): If specified, the condition will only evaluate as `true` if the column value is a number or date/time and is greater than or equal to the given value.
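Because every condition in an `if` list must evaluate as `true`, listing two comparison conditions on the same column gives a range check. The sketch below assumes a hypothetical numeric `age` column on the `users` table: rows with an age from 18 up to (but not including) 65 have their last_name masked, and all other rows are left as they are.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: id
    rules:
      - if:
          # Both conditions must be true, i.e. 18 <= age < 65.
          - column: age
            greater_than_or_equal: 18
          - column: age
            less_than: 65
        rules:
          - column: last_name
            masks:
              - type: from_fixed
                value: 'Smith'
```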

Conditions can also be grouped with the logical operators `or`, `not`, and `and`:

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: user_id
    rules:
      - if:
          - and:
              - not:
                  # Single quotes: \w is not a valid escape in a double-quoted YAML string.
                  - column: username
                    matches: 'customer_.\w+'
              - or:
                  - column: admin
                    equals: true
                  - column: role
                    equals: "admin"
        rules:
          - column: username
            masks:
              - type: from_fixed
                value: "Bob"
```

Note: When using an `if` conditional in rulesets, final row counts will reflect the number of rows processed rather than the number of rows masked. This is because rows are filtered on the application side, so all fetched rows are processed and added to the row count. Alternatively, `where` conditionals can be used in the ruleset, which will provide an accurate count of masked rows.
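For example, when rows that fail the condition do not need any masking at all, the female branch of the earlier `if` example could be expressed as a `where` clause instead, as sketched below. Unlike the `if` version, non-matching rows are never fetched, so they are neither masked nor counted. The `where` clause is raw SQL and this sketch assumes a database that accepts double-quoted identifiers.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: id
    # Filtered in the database: only female users are fetched,
    # so the reported row count reflects the rows actually masked.
    where: >-
      "users"."gender" = 'female'
    rules:
      - column: first_name
        masks:
          - type: from_fixed
            value: 'Alice'
```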

Skip - not masking specific values

A common use case is to not apply masks to certain values, e.g. to leave NULL values or empty strings unchanged. You can choose not to mask certain values in a column by specifying a list of values to skip:

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: user_id
    rules:
      - column: username
        skip:
          - null
          - ""
          # Single quotes: \w is not a valid escape in a double-quoted YAML string.
          - matches: 'admin_.\w+'
        masks:
          - type: from_fixed
            value: "Bob"
```

Any column values that are exactly equal to any of the string/numeric/null values in the skip list will not be masked (data types are also checked, i.e. `100` is not equal to `"100"`).

Additionally, string column values matching a regular expression can be skipped by specifying the skip value as `matches: "my_regex"`. For more details on how to use regular expressions, see Common regular expression patterns.
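The sketch below illustrates the type-checked matching on a hypothetical `code` column: the integer `0` and the string `"0"` are separate skip entries, and which one applies depends on the type returned for the column. A regular expression skips whitespace-only strings. The pattern is single-quoted because YAML does not treat backslashes as escapes inside single quotes, whereas `\s` inside double quotes is rejected by YAML parsers as an unknown escape.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: user_id
    rules:
      - column: code
        skip:
          - null
          - 0                  # skips the integer 0 only
          - "0"                # skips the string "0" only
          - matches: '^\s*$'   # skips empty or whitespace-only strings
        masks:
          - type: from_fixed
            value: 'XXXX'
```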

Mask types

Masks are the basic 'building-block' algorithms provided by DataMasque for generating and manipulating column values. Multiple masks can be combined in a list to create a pipeline of transformations on the data, or combined using combinator masks to build up more complex output values.

Parameters

Mask algorithms are defined by their `type` parameter, which is common to (and required by) all masks:

- `type` (required): Determines the type of mask, and therefore which other parameters can be specified.

Note: Masks operate either by manipulating the original column value, or by generating an entirely new value that replaces the original value. The latter can be referred to as a 'source' mask, as the mask is a source of new values. Such 'source' masks are indicated by the `from_` prefix on the mask `type`.

Available mask types

Generic masks

Fixed value (from_fixed)

A simple mask that replaces all column values with the same fixed value.

Parameters

- `value` (required): The value to replace all column values with. It can be any data type, but should match that of the column being masked. The value can be enclosed in quotation marks, which will convert it to a string, or entered without quotation marks.
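To illustrate the quoting rule, the fragment below masks two hypothetical columns: an integer `age` column with an unquoted number, and a text `postcode` column with a quoted value. Without the quotes, common YAML parsers would read `0000` as the integer 0, so the leading zeros would be lost.

```yaml
version: "1.0"
tasks:
  - type: mask_table
    table: users
    key: id
    rules:
      - column: age
        masks:
          - type: from_fixed
            value: 30        # unquoted: an integer
      - column: postcode
        masks:
          - type: from_fixed
            value: '0000'    # quoted: the string "0000"
```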

Example