Sensitive Data Discovery

- Overview

- Methodology

- JSON Sensitive Data Discovery

- Reporting and notifications

- Keywords configuration

- Appendix: Built-in data discovery keywords

Overview

DataMasque can be configured to automatically discover sensitive data in your databases during masking. When a masking ruleset contains the special-purpose run_data_discovery task type, DataMasque will inspect the database metadata and generate a discovery report for each masking run. Additionally, each user will receive email notifications of any new, unmasked sensitive data discovered by DataMasque. This provides ongoing protection against new sensitive data being added to your schemas over time.

Note: The schema discovery feature does not currently support Amazon DynamoDB or Microsoft SQL Server (Linked Server) databases.

Methodology

To perform sensitive data discovery, DataMasque uses regular expressions (regex) to scan the metadata of the target database. When a database column is identified as sensitive, DataMasque will compare it with the masking rules specified in the ruleset to determine the masking coverage for the column.

DataMasque comes with over 90 built-in keywords to help discover various types of sensitive data (account numbers, addresses, etc.) in your database. These built-in keywords are global keywords, used for sensitive data discovery by default and for schema discovery when enabled. Each pattern is classified into one or more categories. The included data classification categories are:

- Personally Identifiable Information (PII)

- Personal Health Information (PHI)

- Payment Card Information (PCI)
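The methodology above can be sketched in a few lines of Python. The sketch flags columns whose names match keyword regexes, merges every category each regex belongs to (so a "name" column can be PII, PCI and PHI at once, as in the appendix), and then checks whether a masking rule targets the column. `BUILT_IN_PATTERNS`, `discover` and the set-membership coverage check are hypothetical simplifications. They are not DataMasque's actual keywords or internals.

```python
import re

# Hypothetical, heavily simplified stand-ins for a few built-in keywords.
# Each regex maps to one or more data classification categories.
BUILT_IN_PATTERNS = [
    (re.compile(r"name", re.IGNORECASE), {"PII", "PCI", "PHI"}),
    (re.compile(r"e?mail", re.IGNORECASE), {"PII"}),
    (re.compile(r"cvv|security[ _-]?code", re.IGNORECASE), {"PCI"}),
]


def discover(columns, ruleset_targets):
    """Return one report row per column whose name matches any pattern.

    columns: iterable of (schema, table, column) tuples read from metadata.
    ruleset_targets: set of (schema, table, column) tuples that masking
        rules target (a simplification of real ruleset coverage analysis).
    """
    report = []
    for schema, table, column in columns:
        categories = set()
        for pattern, pattern_categories in BUILT_IN_PATTERNS:
            if pattern.search(column):
                categories |= pattern_categories
        if categories:
            report.append({
                "schema": schema,
                "table": table,
                "column": column,
                "classifications": sorted(categories),
                "covered": (schema, table, column) in ruleset_targets,
            })
    return report


columns = [("hr", "staff", "last_name"), ("hr", "staff", "email"), ("hr", "staff", "start_date")]
for row in discover(columns, ruleset_targets={("hr", "staff", "last_name")}):
    print(row)
```

Here start_date matches no pattern and is not reported. last_name is reported as covered, and email is reported as uncovered.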

JSON Sensitive Data Discovery

Sensitive information contained within JSON data can be discovered through the JSON Sensitive Data Discovery feature, available in the YAML Ruleset Editor.

Enter JSON data into the text field and run the discovery feature to identify the lowest-level keys whose values could contain sensitive information. Keys are evaluated against the built-in data discovery keywords.

Once finished, the feature returns an automatically generated rule that masks the values of the sensitive keys within the specified JSON data. This rule can then be copied, or inserted directly into the ruleset in the YAML Editor.

Example

This example shows the benefit of generating the rule with the JSON Mask Generator. Suppose a column contains the following JSON data:

```json
{
  "customers": [
    {
      "primary": {
        "name": "Foo",
        "credit card": 123456789
      },
      "secondary": {
        "name": "Bar",
        "credit card": 987654321
      }
    }
  ]
}
```

This data can be entered into the JSON Data field of the JSON Mask Generator and a rule will be created to mask the sensitive keys (name and credit card) as shown below. This can then be copied into the ruleset under the relevant task.

```yaml
type: json
transforms:
  - path:
      - customers
      - '*'
      - primary
      - name
    masks:
      - type: from_fixed
        value: redacted
    on_null: skip
    on_missing: skip
    force_consistency: false
  - path:
      - customers
      - '*'
      - primary
      - credit card
    masks:
      - type: chain
        masks:
          - type: credit_card
            validate_luhn: true
            pan_format: false
            preserve_prefix: false
          - type: take_substring
            start_index: 0
            end_index: 9
    on_null: skip
    on_missing: skip
    force_consistency: false
  - path:
      - customers
      - '*'
      - secondary
      - name
    masks:
      - type: from_fixed
        value: redacted
    on_null: skip
    on_missing: skip
    force_consistency: false
  - path:
      - customers
      - '*'
      - secondary
      - credit card
    masks:
      - type: chain
        masks:
          - type: credit_card
            validate_luhn: true
            pan_format: false
            preserve_prefix: false
          - type: take_substring
            start_index: 0
            end_index: 9
    on_null: skip
    on_missing: skip
    force_consistency: false
```
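Each path list in the generated rule leads from the document root to a leaf key, and the array position is written as '*'. The following Python sketch performs that walk. It uses a toy regex in place of the built-in keywords. `SENSITIVE_KEY` and `sensitive_leaf_paths` are hypothetical names, not DataMasque functions. For the example data, the sketch reproduces the four paths above in the same order.

```python
import json
import re

# Toy stand-in for the built-in keywords (assumption): matches "name" and
# "credit card" with an optional space, underscore, or hyphen between words.
SENSITIVE_KEY = re.compile(r"name|credit[ _-]?card", re.IGNORECASE)


def sensitive_leaf_paths(node, prefix=()):
    """Yield paths (lists of keys) to leaf values whose key looks sensitive.

    Array elements are represented by '*', so one path covers every element.
    """
    if isinstance(node, dict):
        for key, value in node.items():
            if isinstance(value, (dict, list)):
                yield from sensitive_leaf_paths(value, prefix + (key,))
            elif SENSITIVE_KEY.search(key):
                yield list(prefix + (key,))
    elif isinstance(node, list):
        seen = set()
        for item in node:
            for path in sensitive_leaf_paths(item, prefix + ("*",)):
                # The same path can appear in many array elements; emit it once.
                if tuple(path) not in seen:
                    seen.add(tuple(path))
                    yield path


data = json.loads("""
{"customers": [{"primary": {"name": "Foo", "credit card": 123456789},
                "secondary": {"name": "Bar", "credit card": 987654321}}]}
""")

for path in sensitive_leaf_paths(data):
    print(path)
```

Using '*' instead of concrete indices is why a single transform covers every customer in the array, however many there are.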

Reporting and notifications

Per-run data discovery report

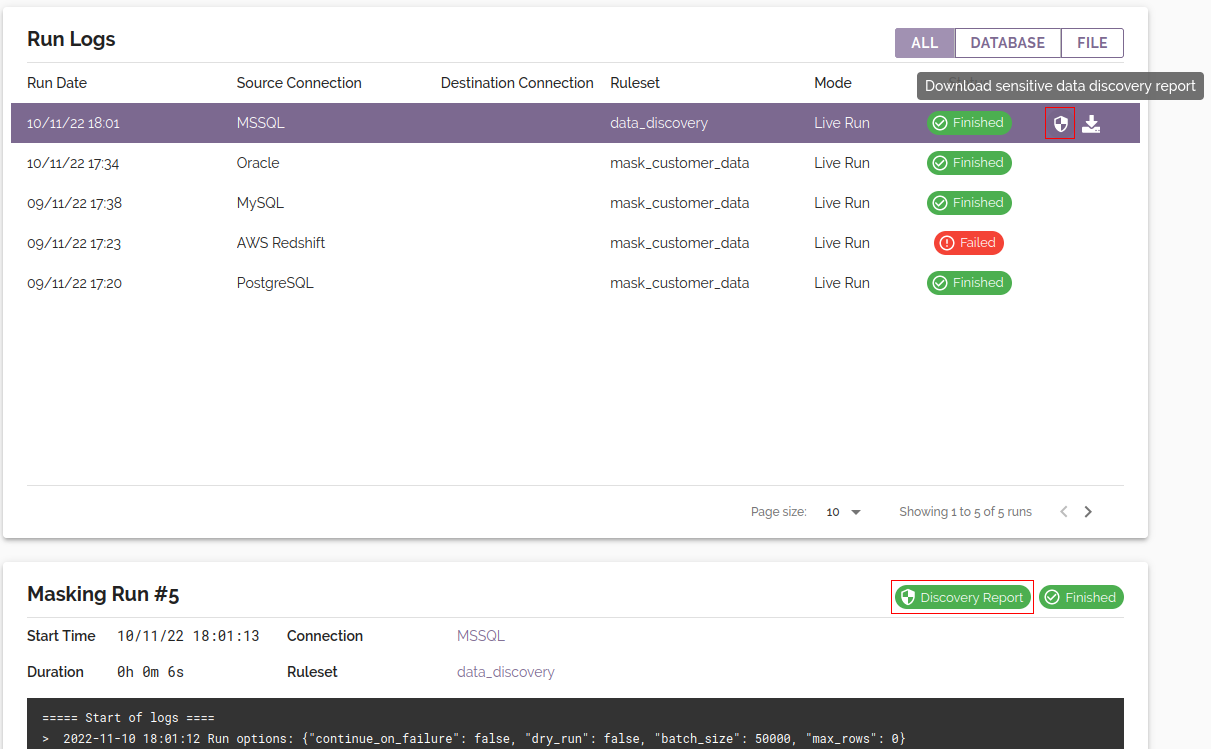

After a run_data_discovery task has

completed, the corresponding data discovery report can be downloaded alongside the

run logs of the masking run:

The sensitive data discovery report will be downloaded in CSV format and may be opened in a text editor or spreadsheet viewer such as Microsoft Excel. The report contains information to assist you in discovering and masking the sensitive data in your database. Every column that has been identified as potentially containing sensitive data is included in the report, along with a classification of the data and an indication of whether the masking ruleset contains a rule targeting the matched column.

The CSV report contains the following columns:

| Column | Description |
| --- | --- |
| Table schema | The schema of the table containing a sensitive data match. |
| Table name | The name of the table containing a sensitive data match. |
| Column name | The name of the column which has matched against a commonly used sensitive data identifier. |
| Reason for flag | Description of pattern which caused the column to be flagged for sensitive data. |
| Data classifications | A comma-separated list of classifications for the flagged sensitive data. Possible classifications include PII (Personally Identifiable Information), PHI (Personal Health Information), and PCI (Payment Card Information). |
| Covered by ruleset | A boolean value (True/False) indicating whether the masking ruleset contains a rule to target the identified column. |
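Because the report is plain CSV, you can post-process it with standard tools. The following sketch uses Python's csv module to list flagged columns that no masking rule covers. It assumes the header row uses exactly the column names in the table above, and that the last column holds the strings True or False. Check a downloaded report before relying on either assumption. The sample rows are made up for illustration.

```python
import csv
import io


def uncovered_columns(report_file):
    """Return (schema, table, column, classifications) for uncovered rows.

    Assumes the report headers match the documented column names and that
    "Covered by ruleset" contains the strings "True" or "False".
    """
    results = []
    for row in csv.DictReader(report_file):
        if row["Covered by ruleset"].strip().lower() != "true":
            results.append((
                row["Table schema"],
                row["Table name"],
                row["Column name"],
                row["Data classifications"],
            ))
    return results


# Hypothetical report contents, for illustration only.
sample = io.StringIO(
    "Table schema,Table name,Column name,Reason for flag,Data classifications,Covered by ruleset\n"
    "public,customers,email,Email address,PII,True\n"
    'public,customers,card_number,Credit card number,"PCI, PII",False\n'
)

for schema, table, column, classes in uncovered_columns(sample):
    print(f"{schema}.{table}.{column} ({classes}) is not covered by the ruleset")
```

To process a real download, pass a file opened with `open(path, newline="")` in place of the StringIO sample.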

Note for Oracle: Sensitive data discovery reports will only cover the tables owned by the user or schema defined in the Connection. If both are defined, the schema takes precedence over the user.

Note for Microsoft SQL Server: Sensitive data discovery reports will only cover the tables owned by the user's default schema.

Note for PostgreSQL: Sensitive data discovery reports will only cover the visible tables in the current user search path.

A table is said to be visible if its containing schema is in the search path and no table of the same name appears earlier in the search path.
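The visibility rule can be written as a short function. In this Python sketch, `search_path` is an ordered list of schema names and `tables_by_schema` maps each schema to its table names. Both are hypothetical inputs that stand in for what PostgreSQL would report. Inside the database, PostgreSQL's pg_table_is_visible function performs a comparable check.

```python
def visible_tables(search_path, tables_by_schema):
    """Return (schema, table) pairs that are visible under the search path.

    A table is visible if its schema is on the search path and no table with
    the same name appears in an earlier schema on the path.
    """
    seen_names = set()
    visible = []
    for schema in search_path:
        for table in sorted(tables_by_schema.get(schema, ())):
            if table not in seen_names:
                visible.append((schema, table))
                seen_names.add(table)
    return visible


tables = {
    "app": {"customers", "orders"},
    "public": {"customers", "audit_log"},
    "archive": {"old_orders"},
}
print(visible_tables(["app", "public"], tables))
```

In this example, public.customers is hidden by app.customers, and archive is not on the search path. Neither would appear in the report.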

Notification of new sensitive data

DataMasque users will receive email notifications of newly detected, unmasked sensitive data in your databases. This feature provides ongoing protection against new sensitive data making its way into your databases over time.

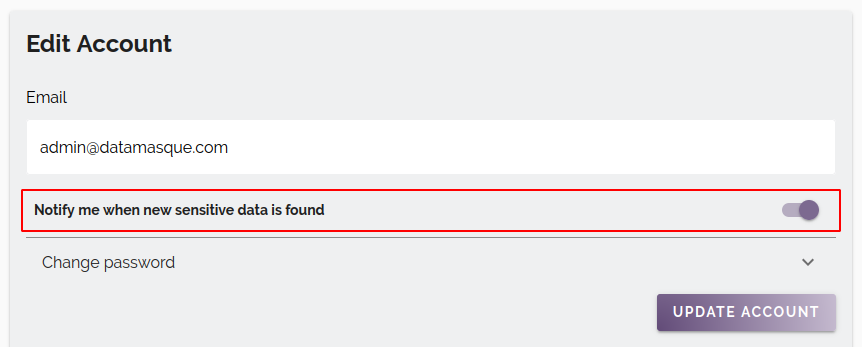

Disabling notifications

Each user can opt out of receiving email updates by navigating to the My Account page and disabling the Notify me when sensitive data is found option of the Edit Account form:

When notifications are sent

Each user who has enabled this option will receive daily notification emails when new, unmasked sensitive data is detected on any Connection that has been masked in the previous 24 hours. If there is nothing to report, no notifications will be sent.

Notifications will be sent if, in the previous 24 hours, a data discovery task has been run:

- On a new Connection that contains unmasked sensitive data.

- As part of a ruleset in which a masking rule that was previously protecting sensitive data has been removed.

- On an existing Connection which has had one or more columns containing sensitive data added to the database.
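One way to read the three conditions above is as a set difference. A column triggers a notification when it is currently flagged and unmasked, but was not flagged and unmasked in the previous discovery run on that Connection. The following Python sketch models this reading. It is an interpretation for illustration, not DataMasque's implementation, and `columns_to_notify` and the column tuples are hypothetical.

```python
def columns_to_notify(prev_flagged, prev_covered, cur_flagged, cur_covered):
    """Return columns that are newly flagged as sensitive and unmasked.

    Each argument is a set of (schema, table, column) tuples for one
    Connection. The prev_* sets describe the previous discovery run (empty
    for a new Connection); the cur_* sets describe the latest run.
    """
    previously_unmasked = prev_flagged - prev_covered
    currently_unmasked = cur_flagged - cur_covered
    return currently_unmasked - previously_unmasked


SSN = ("hr", "staff", "ssn")
EMAIL = ("hr", "staff", "email")
PHONE = ("hr", "staff", "phone")

# 1. New Connection: there is no previous run, so any unmasked column is new.
print(columns_to_notify(set(), set(), {SSN}, set()))

# 2. The rule that covered EMAIL was removed from the ruleset.
print(columns_to_notify({SSN, EMAIL}, {SSN, EMAIL}, {SSN, EMAIL}, {SSN}))

# 3. A new sensitive column (PHONE) was added to the database.
print(columns_to_notify({SSN}, {SSN}, {SSN, PHONE}, {SSN}))

# Nothing new: an empty set, which under this reading means no email is sent.
print(columns_to_notify({SSN}, {SSN}, {SSN}, {SSN}))
```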

Notes:

- New sensitive data is only detected during masking runs that include a run_data_discovery task. Include this task in all masking runs to receive ongoing protection.

- This feature requires that SMTP has been configured, allowing DataMasque to send outbound email.

Keywords configuration

DataMasque can be configured with additional custom keywords and ignored keywords to facilitate sensitive data discovery. Both custom keywords and ignored keywords are case-insensitive. These can be configured from the Settings page.

Global Custom Data Classification Keywords

Column names are matched to Global Custom Data Classification keywords in addition to the built-in data discovery keywords. Two formats are supported for custom keywords:

- Keyword format:
  - The matching behaviour of the keyword will depend on the number of period-separated segments:
    - If no period is present, the keyword will be compared to the column name.
    - If a single period is present, the keyword will be compared to the schema and table name: `schema.table`.
    - If two periods are present, the keyword will be compared to the schema, table, and column name: `schema.table.column`.
  - Literal periods (`.`) and backslashes (`\`) in names can be escaped with a preceding backslash.
  - The keyword will be matched case-insensitively against the data-dictionary representations of schema/table/column names.
  - The keyword will still match if the name contains additional characters preceding/following a substring that matches the corresponding segment of the keyword.
  - Spaces in a keyword will match space, underscore, and hyphen delimiter characters, and will also match in the absence of such a delimiter character. For example, the space-separated keyword `credit card number` would match columns such as `credit card number`, `creditcardnumber`, `creditcard_number`, and `credit-card number`.
  - The `*` wildcard is also supported. For example, you can discover all columns in a specific table by specifying `schema_name.table_name.*`.
  - Only alphanumeric characters, spaces, underscores, hyphens, periods, asterisk wildcards, and escaping backslashes are allowed.
- Regex format:
  - A keyword prefixed with `regex:` will be treated as a regular expression over a full `schema_name.table_name.column_name` string.
  - The regex will be matched case-insensitively against the data-dictionary representations of schema/table/column names.
  - Any backslashes or periods in schema/table/column data-dictionary names will be prefixed by a backslash in the string to be matched by the regex.
  - For more details on regular expressions, see: Common regular expression patterns.
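The keyword-format rules above can be approximated by translating each keyword into one regular expression per segment. In the following Python sketch, unescaped periods split the segments. A space becomes an optional space, underscore or hyphen, `*` becomes `.*`, and all other characters match literally. Each segment is then searched (not anchored) case-insensitively in the corresponding name. The helper names are hypothetical, and DataMasque's own matcher may differ in its details.

```python
import re


def split_segments(keyword):
    """Split on unescaped periods; a backslash makes the next character literal."""
    segments, current = [], []
    i = 0
    while i < len(keyword):
        ch = keyword[i]
        if ch == "\\" and i + 1 < len(keyword):
            current.append(keyword[i + 1])  # escaped period or backslash
            i += 2
            continue
        if ch == ".":
            segments.append("".join(current))
            current = []
        else:
            current.append(ch)
        i += 1
    segments.append("".join(current))
    return segments


def segment_regex(segment):
    """Translate one keyword segment into a regex pattern string."""
    parts = []
    for ch in segment:
        if ch == " ":
            parts.append("[ _-]?")  # space, underscore, hyphen, or nothing
        elif ch == "*":
            parts.append(".*")      # wildcard
        else:
            parts.append(re.escape(ch))
    return "".join(parts)


def keyword_matches(keyword, schema, table, column):
    """Return True if a keyword-format keyword matches the given names."""
    segments = split_segments(keyword)
    targets = {1: [column], 2: [schema, table], 3: [schema, table, column]}
    names = targets.get(len(segments))
    if names is None:
        raise ValueError("a keyword may contain at most two unescaped periods")
    # Substring search: extra characters around the match are allowed.
    return all(
        re.search(segment_regex(seg), name, re.IGNORECASE) is not None
        for seg, name in zip(segments, names)
    )


print(keyword_matches("credit card number", "sales", "orders", "creditcard_number"))  # True
print(keyword_matches("sales.orders.*", "sales", "returns", "card_no"))  # False
```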

Column names that match the Global Custom Data Classification keywords will be reported and tagged with the data classification "Custom" in Sensitive Data Discovery reports.
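For the regex format, the following sketch builds the `schema_name.table_name.column_name` string in the way the list above describes. Backslashes and periods inside each name are prefixed with a backslash, then the three names are joined with periods. The pattern after `regex:` is applied case-insensitively, and matches are tagged "Custom". The sketch assumes unanchored matching (Python's re.search), so the example anchors its pattern explicitly with `^` and `$`. All function names are hypothetical.

```python
import re


def escape_name(name):
    """Prefix backslashes and periods with a backslash (backslashes first)."""
    return name.replace("\\", "\\\\").replace(".", "\\.")


def regex_keyword_matches(keyword, schema, table, column):
    """Match a 'regex:'-prefixed keyword against schema.table.column."""
    if not keyword.startswith("regex:"):
        raise ValueError("expected a keyword starting with 'regex:'")
    pattern = keyword[len("regex:"):]
    target = ".".join(escape_name(n) for n in (schema, table, column))
    # Assumption: unanchored search; add ^ and $ to require a full match.
    return re.search(pattern, target, re.IGNORECASE) is not None


def classify_custom(keywords, schema, table, column):
    """Return ['Custom'] if any regex keyword matches, else an empty list."""
    if any(regex_keyword_matches(k, schema, table, column) for k in keywords):
        return ["Custom"]
    return []


keywords = [r"regex:^hr\.employees\..*_ssn$"]
print(classify_custom(keywords, "HR", "Employees", "emp_ssn"))   # ['Custom']
print(classify_custom(keywords, "HR", "Employees", "emp_name"))  # []
```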

Global Ignored keywords

Global Ignored keywords will only ignore exact matches of a column name, which allows you to exclude specific column names from Sensitive Data Discovery reports. Two formats are supported:

- Keyword format:
  - The matching behaviour of the keyword will depend on the number of period-separated segments:
    - If no period is present, the keyword will be compared to the column name.
    - If a single period is present, the keyword will be compared to the schema and table name: `schema.table`.
    - If two periods are present, the keyword will be compared to the schema, table, and column name: `schema.table.column`.
  - Literal periods (`.`) and backslashes (`\`) in names can be escaped with a preceding backslash.
  - The keyword will be matched case-insensitively against the data-dictionary representations of schema/table/column names.
  - The `*` wildcard is also supported. For example, you can ignore all columns in a specific table by specifying `schema_name.table_name.*`.
  - Only alphanumeric characters, spaces, underscores, hyphens, periods, asterisk wildcards, and escaping backslashes are allowed.
- Regular expression format:
  - A keyword prefixed with `regex:` will be treated as a regular expression over a full `schema_name.table_name.column_name` string.
  - The regex will be matched case-insensitively against the data-dictionary representations of schema/table/column names.
  - Any backslashes or periods in schema/table/column data-dictionary names will be prefixed by a backslash in the string to be matched by the regex.
  - For more details on regular expressions, see: Common regular expression patterns.

For example, with the ignored keyword p_id, columns named p_id will be ignored and will no longer be identified as sensitive data.
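In the keyword format, ignored keywords match whole names only, unlike custom keywords, which match substrings. The following Python sketch covers only the keyword format. Each segment is matched with re.fullmatch, `*` is treated as a wildcard and all other characters as literals. Spaces get no special handling because the list above does not give ignored keywords the flexible space rule. The sketch then filters flagged columns out of a report. `ignored` and `apply_ignored` are hypothetical names.

```python
import re


def split_segments(keyword):
    """Split on unescaped periods; a backslash makes the next character literal."""
    segments, current = [], []
    i = 0
    while i < len(keyword):
        ch = keyword[i]
        if ch == "\\" and i + 1 < len(keyword):
            current.append(keyword[i + 1])
            i += 2
            continue
        if ch == ".":
            segments.append("".join(current))
            current = []
        else:
            current.append(ch)
        i += 1
    segments.append("".join(current))
    return segments


def ignored(keyword, schema, table, column):
    """True if the ignored keyword exactly matches the corresponding names."""
    segments = split_segments(keyword)
    targets = {1: [column], 2: [schema, table], 3: [schema, table, column]}
    names = targets.get(len(segments))
    if names is None:
        raise ValueError("a keyword may contain at most two unescaped periods")
    for seg, name in zip(segments, names):
        # Literal text with * as a wildcard; the whole name must match.
        pattern = ".*".join(re.escape(part) for part in seg.split("*"))
        if re.fullmatch(pattern, name, re.IGNORECASE) is None:
            return False
    return True


def apply_ignored(flagged, ignored_keywords):
    """Drop flagged (schema, table, column) tuples matched by any ignored keyword."""
    return [c for c in flagged if not any(ignored(k, *c) for k in ignored_keywords)]


flagged = [("app", "users", "p_id"), ("app", "users", "p_id_old"), ("app", "audit", "email")]
print(apply_ignored(flagged, ["p_id"]))
print(apply_ignored(flagged, ["app.audit.*"]))
```

With the keyword p_id, only the column named exactly p_id is dropped, and p_id_old is still reported.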

Appendix: Built-in data discovery keywords

DataMasque uses regular expressions to search for columns which may contain the following sensitive information:

Category PII

- Name / first name / middle name / last name / surname / fName / mName / lName

- Fax number

- Mail / email

- Date of birth / DoB

- SSN / Social Security Number

- Address

- Post code

- Phone

- Insurance number

- Passport number

- Driver license number

- Country / state / city / zip code

- Gender

- Age

- Vehicle identification number / VIN

- Login

- Media access control / MAC

- Job position / role / title

- Workspace / company

- NRIC / Identity Card Number

- IC number

- ID number

- IRD number / Inland Revenue Department number

- NINO

- Unique taxpayer reference / UTR

- Identity / identification / tax number / ID

- Internet protocol address / IP address

- Licence plate

- Licence number

- Certificate number

- Identifiers / serial number

Category PCI

- Credit / payment / debit card

- Credit / payment / debit number

- Account number

- Security code

- Expiry date

- Name / first name / middle name / last name / surname / fName / mName / lName

- PIN / Personal identification numbers

- CVV / Card Verification Value

- Address

- Country / state / city / zip code

Category PHI

- PHI number

- NHI number

- Medical record number

- Insurance number

- Internet protocol address / IP address

- Name / first name / middle name / last name / surname / fName / mName / lName

- Health plan beneficiary number

- Identifiers / serial number

- Identifying number / code

- Licence plate

- Licence number

- Certificate number

- Address

- Country / state / city / zip code